Animal Keypoints#

This section describes the OpenPifPaf plugin for animals. The plugin uses the Animalpose dataset, which includes five categories of animals: dogs, cats, sheep, horses and cows. For more information, see our latest paper:

OpenPifPaf: Composite Fields for Semantic Keypoint Detection and Spatio-Temporal Association

Sven Kreiss, Lorenzo Bertoni, Alexandre Alahi, 2021.

Predict#

Prediction runs with the standard openpifpaf.predict command; the only difference is the pretrained model.

To download and use the model, add --checkpoint shufflenetv2k30-animalpose to the prediction command.

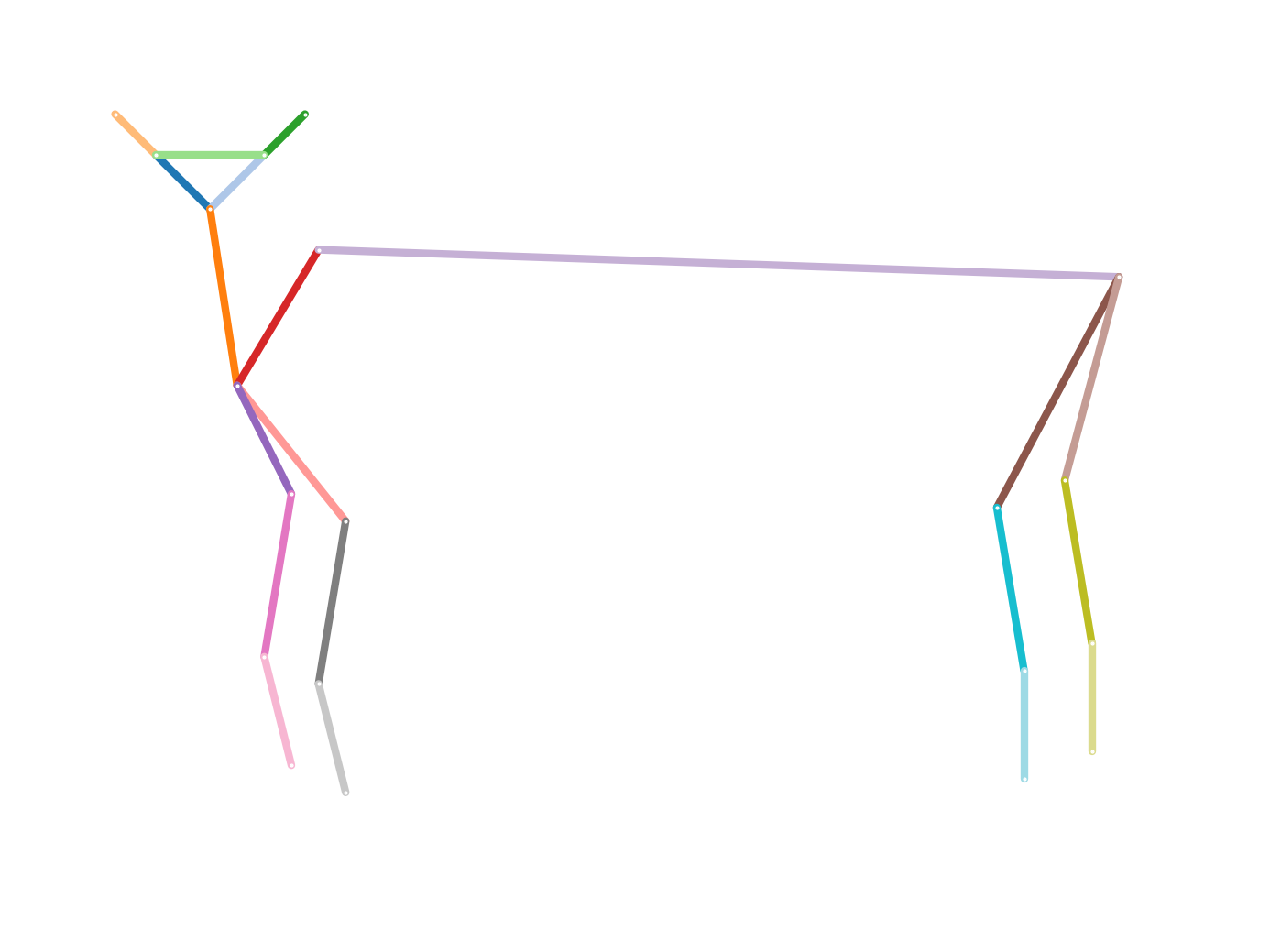

For example, on a picture of our researcher Tappo Altotto:

%%bash

python -m openpifpaf.predict images/tappo_loomo.jpg --image-output \
  --checkpoint=shufflenetv2k30-animalpose \
  --line-width=6 --font-size=6 --white-overlay=0.3 --long-edge=500

INFO:__main__:neural network device: cpu (CUDA available: False, count: 0)

INFO:openpifpaf.decoder.factory:No specific decoder requested. Using the first one from:

--decoder=cifcaf:0

--decoder=posesimilarity:0

Use any of the above arguments to select one or multiple decoders and to suppress this message.

INFO:openpifpaf.predictor:neural network device: cpu (CUDA available: False, count: 0)

INFO:openpifpaf.decoder.cifcaf:annotations 1: [9]

INFO:openpifpaf.predictor:batch 0: images/tappo_loomo.jpg

src/openpifpaf/csrc/src/cif_hr.cpp:102: UserInfo: resizing cifhr buffer

src/openpifpaf/csrc/src/occupancy.cpp:53: UserInfo: resizing occupancy buffer

import IPython

IPython.display.Image('images/tappo_loomo.jpg.predictions.jpeg')

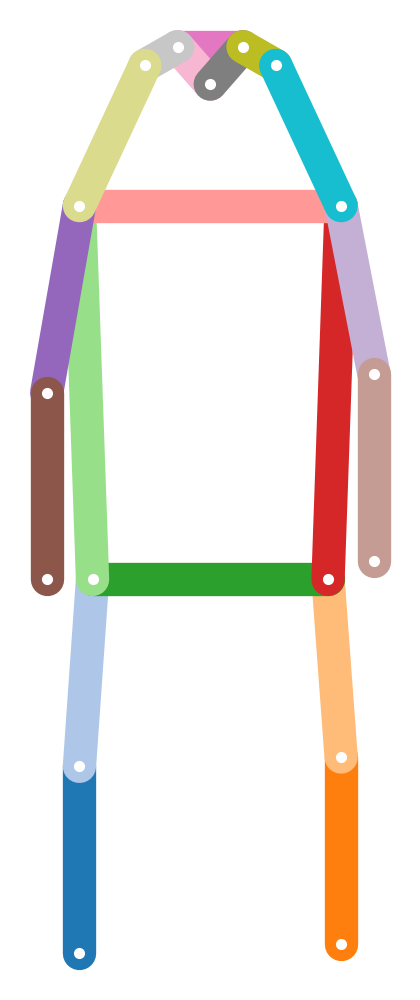
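The prediction command above can also be assembled from a Python script, which is convenient when looping over many images. The following is a minimal sketch that only reuses the flags shown in this section; the helper name `build_predict_command` and its parameters are illustrative and not part of OpenPifPaf.

```python
import shlex


def build_predict_command(image_path,
                          checkpoint="shufflenetv2k30-animalpose",
                          long_edge=500):
    """Return the argument list for the predict command shown in this section.

    Hypothetical helper: it only mirrors the command-line flags used above.
    """
    return [
        "python", "-m", "openpifpaf.predict", image_path,
        "--image-output",
        f"--checkpoint={checkpoint}",
        "--line-width=6", "--font-size=6", "--white-overlay=0.3",
        f"--long-edge={long_edge}",
    ]


command = build_predict_command("images/tappo_loomo.jpg")

# Print the command as a single shell-safe string for inspection.
print(shlex.join(command))

# To actually run the prediction (requires openpifpaf to be installed):
# import subprocess
# subprocess.run(command, check=True)
```

Passing the arguments as a list avoids shell quoting problems with file names that contain spaces.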

The standard skeleton of an animal is the following:

import openpifpaf

# first make an annotation from the first head meta of the animal plugin
ann_animal = openpifpaf.Annotation.from_cif_meta(
    openpifpaf.plugins.animalpose.animal_kp.AnimalKp().head_metas[0])

# visualize the annotation
openpifpaf.show.KeypointPainter.show_joint_scales = False
openpifpaf.show.KeypointPainter.line_width = 3
keypoint_painter = openpifpaf.show.KeypointPainter()
with openpifpaf.show.Canvas.annotation(ann_animal) as ax:
    keypoint_painter.annotation(ax, ann_animal)

Preprocess dataset#

The Animalpose dataset is in a mixed format. Some annotations come from a collection of keypoint annotations of the PASCAL dataset from UC Berkeley, which the authors of the Animalpose dataset have extended. Other annotations were created by the authors of the dataset. As no dataset split was available, we created a random training/validation split with 4000 images for training and the remaining 600+ for validation.
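A random split of this kind can be sketched in a few lines. This is only an illustration of the idea, not the code used by the plugin: the function name, the seed and the placeholder total of 4600 image ids are assumptions made for the example.

```python
import random


def split_train_val(image_ids, n_train=4000, seed=0):
    """Shuffle the image ids reproducibly and cut them into train and val.

    Illustrative sketch: the seed and the sorting step are assumptions,
    chosen so that the split is the same on every run.
    """
    ids = sorted(image_ids)          # fixed starting order
    rng = random.Random(seed)        # local generator, no global state
    rng.shuffle(ids)
    return ids[:n_train], ids[n_train:]


# Placeholder ids; the real dataset has 4000 training images plus 600+ others.
image_ids = [f"img_{i:04d}" for i in range(4600)]
train_ids, val_ids = split_train_val(image_ids)
print(len(train_ids), len(val_ids))
```

Fixing the seed keeps the validation set stable across runs, so results from different training runs stay comparable.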

The following instructions will help with downloading and preprocessing the Animalpose dataset.

mkdir data-animalpose

cd data-animalpose

pip install gdown

gdown https://drive.google.com/uc\?id\=1UkZB-kHg4Eijcb2yRWVN94LuMJtAfjEI

gdown https://drive.google.com/uc\?id\=1zjYczxVd2i8bl6QAqUlasS5LoZcQQu8b

gdown https://drive.google.com/uc\?id\=1MDgiGMHEUY0s6w3h9uP9Ovl7KGQEDKhJ

wget http://host.robots.ox.ac.uk/pascal/VOC/voc2011/VOCtrainval_25-May-2011.tar

tar -xvf keypoint_image_part2.tar.gz

tar -xvf keypoint_anno_part2.tar.gz

tar -xvf keypoint_anno_part1.tar.gz

tar -xvf VOCtrainval_25-May-2011.tar

rm keypoint_*.tar.gz

rm VOCtrainval_25-May-2011.tar

cd ..

After downloading the dataset, run:

python3 -m openpifpaf.plugins.animalpose.scripts.voc_to_coco
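For orientation, the target of such a conversion is the COCO keypoint annotation format, where each instance stores its keypoints as one flat list of x, y, visibility triples. The sketch below shows that layout only; the helper name, the three example keypoints and the area approximation are assumptions, and the actual conversion script may differ in its details.

```python
def to_coco_annotation(ann_id, image_id, category_id, keypoints, bbox):
    """Build one COCO-style keypoint annotation dictionary.

    keypoints: list of (x, y, v) with v = 0 (not labeled),
               1 (labeled, not visible) or 2 (labeled and visible).
    bbox:      [x, y, width, height] as in COCO.
    Illustrative helper, not part of OpenPifPaf.
    """
    flat = [value for keypoint in keypoints for value in keypoint]
    return {
        "id": ann_id,
        "image_id": image_id,
        "category_id": category_id,
        "keypoints": flat,
        "num_keypoints": sum(1 for _, _, v in keypoints if v > 0),
        "bbox": list(bbox),
        "area": bbox[2] * bbox[3],   # assumption: box area as a stand-in
        "iscrowd": 0,
    }


# Made-up example with three keypoints, one of them not labeled.
annotation = to_coco_annotation(
    ann_id=1, image_id=42, category_id=1,
    keypoints=[(10, 20, 2), (0, 0, 0), (30, 40, 1)],
    bbox=[5, 15, 30, 30],
)
print(annotation["keypoints"], annotation["num_keypoints"])
```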

Training#

pip install pycocotools

pip install scipy

python3 -m openpifpaf.train \
  --lr=0.0003 --momentum=0.95 --clip-grad-value=10.0 --b-scale=10.0 \
  --batch-size=16 --loader-workers=12 \
  --epochs=400 --lr-decay 360 380 --lr-decay-epochs=10 --val-interval 5 \
  --checkpoint=shufflenetv2k30 --lr-warm-up-start-epoch=250 \
  --dataset=animal --animal-square-edge=385 --animal-upsample=2 \
  --animal-bmin=2 --animal-extended-scale \
  --weight-decay=1e-5
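To get a feeling for what the learning-rate flags in this command imply, the sketch below computes a learning rate per epoch. It rests on assumptions that are not stated in this section: each decay multiplies the rate by 0.1, the decay is spread smoothly over the 10 decay epochs, and warm-up is ignored. Treat it as an illustration of the schedule's shape, not as OpenPifPaf's implementation.

```python
def learning_rate(epoch, base_lr=0.0003, decay_starts=(360, 380),
                  decay_epochs=10, decay_factor=0.1):
    """Approximate learning rate at a given epoch.

    Assumptions (not taken from this guide): decay_factor is 0.1, each decay
    is interpolated exponentially over decay_epochs, and warm-up is omitted.
    """
    lr = base_lr
    for start in decay_starts:
        if epoch >= start + decay_epochs:
            # This decay step has fully completed.
            lr *= decay_factor
        elif epoch > start:
            # Part-way through the decay window.
            lr *= decay_factor ** ((epoch - start) / decay_epochs)
    return lr


for epoch in (350, 365, 370, 385, 400):
    print(epoch, learning_rate(epoch))
```

Under these assumptions the rate stays at its base value until epoch 360 and ends two orders of magnitude lower at epoch 400.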

Evaluation#

To evaluate our model using the standard COCO metric, we use the following command:

python3 -m openpifpaf.eval \
  --dataset=animal --checkpoint=shufflenetv2k30-animalpose \
  --batch-size=1 --skip-existing --loader-workers=8 --force-complete-pose \
  --seed-threshold=0.01 --instance-threshold=0.01
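The standard COCO keypoint metric is built on the object keypoint similarity (OKS) between a predicted and a ground-truth pose; average precision is then computed over a range of OKS thresholds. The following is a minimal pure-Python sketch of the OKS formula. The sigma values, coordinates and area in the example are made up, and the real evaluation is done by pycocotools, not by this function.

```python
import math


def object_keypoint_similarity(pred, gt, sigmas, area):
    """Compute OKS between one predicted and one ground-truth pose.

    pred:   list of (x, y) predicted keypoints
    gt:     list of (x, y, v) ground-truth keypoints, v > 0 means labeled
    sigmas: per-keypoint falloff constants (hypothetical values below)
    area:   area of the ground-truth object in pixels squared
    """
    total = 0.0
    n_labeled = 0
    for (px, py), (gx, gy, v), sigma in zip(pred, gt, sigmas):
        if v <= 0:
            continue  # unlabeled keypoints do not count
        squared_distance = (px - gx) ** 2 + (py - gy) ** 2
        k_squared = (2.0 * sigma) ** 2
        total += math.exp(-squared_distance / (2.0 * area * k_squared))
        n_labeled += 1
    return total / n_labeled if n_labeled else 0.0


# Made-up example: one labeled keypoint off by 5 pixels, one unlabeled.
gt = [(10.0, 10.0, 2), (0.0, 0.0, 0)]
pred = [(13.0, 14.0), (50.0, 50.0)]
print(object_keypoint_similarity(pred, gt, sigmas=[0.05, 0.05], area=2500.0))
```

Because distances are normalized by the object area, the same pixel error is penalized more on a small animal than on a large one.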

Everything else#

All other PifPaf options and commands still apply; see the other sections of the guide. If you are interested in training on your own dataset, read the section on custom datasets.