Prediction

Use the openpifpaf.predict command line tool to run multi-person pose estimation on images. This page first presents openpifpaf.predict and then gives a short introduction to OpenPifPaf predictions on videos with openpifpaf.video. To create predictions from other Python modules, please refer to the Prediction API.

Images

Run openpifpaf.predict on an image:

%%bash

python -m openpifpaf.predict coco/000000081988.jpg --image-output --json-output

INFO:__main__:neural network device: cpu (CUDA available: False, count: 0)

INFO:openpifpaf.decoder.factory:No specific decoder requested. Using the first one from:

--decoder=cifcaf:0

--decoder=posesimilarity:0

Use any of the above arguments to select one or multiple decoders and to suppress this message.

INFO:openpifpaf.predictor:neural network device: cpu (CUDA available: False, count: 0)

INFO:openpifpaf.decoder.cifcaf:annotations 5: [16, 14, 13, 12, 12]

INFO:openpifpaf.predictor:batch 0: coco/000000081988.jpg

src/openpifpaf/csrc/src/cif_hr.cpp:102: UserInfo: resizing cifhr buffer

src/openpifpaf/csrc/src/occupancy.cpp:53: UserInfo: resizing occupancy buffer

This command produced two outputs: an image and a json file.

You can provide file or folder arguments to the --image-output and --json-output flags.

Here, we used the defaults, which created these two files:

coco/000000081988.jpg.predictions.jpeg

coco/000000081988.jpg.predictions.json
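The two default file names above are the input path with `.predictions.jpeg` and `.predictions.json` appended. A minimal sketch that derives those names so a downstream script can locate the outputs: the helper `default_output_paths` is hypothetical and not part of OpenPifPaf, and it only mirrors the naming seen in this example.

```python
def default_output_paths(image_path):
    """Return (image_output, json_output) following the naming seen above.

    Hypothetical helper: it only mirrors the default file names listed in
    this guide and does not call OpenPifPaf.
    """
    return (
        image_path + '.predictions.jpeg',
        image_path + '.predictions.json',
    )


image_out, json_out = default_output_paths('coco/000000081988.jpg')
print(image_out)
print(json_out)
```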

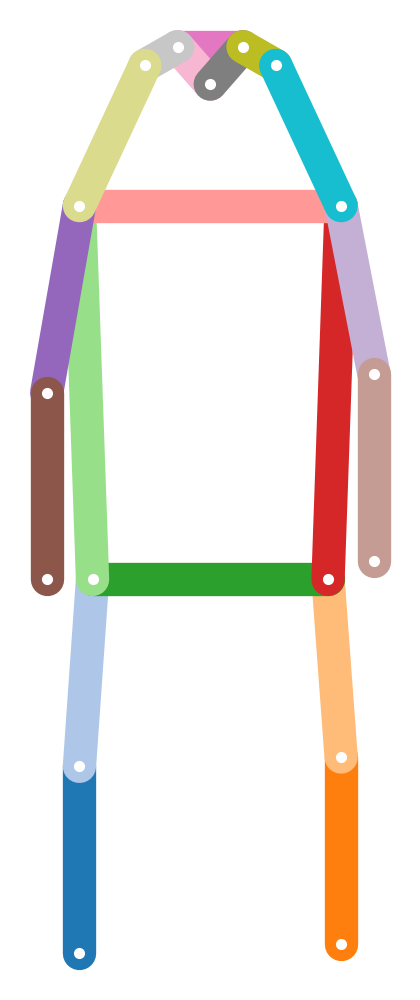

Here is the image:

import IPython

IPython.display.Image('coco/000000081988.jpg.predictions.jpeg')

Image credit: “Learning to surf” by fotologic which is licensed under CC-BY-2.0.

And below is the json output. The json data is a list where each entry in the list corresponds to one pose annotation. In this case, there are five entries corresponding to the five people in the image. Each annotation contains information on "keypoints", "bbox", "score" and "category_id".

All coordinates are in pixel coordinates. The "keypoints" entry is in COCO format with triples of (x, y, c) (c for confidence) for every joint as listed under COCO Person Keypoints. The pixel coordinates have sub-pixel accuracy, i.e. 10.5 means the joint is between pixel 10 and 11.

In rare cases, joints can be localized outside the field of view and then the pixel coordinates can be negative. When c is zero, the joint was not detected.

The "bbox" (bounding box) format is (x, y, w, h): the (x, y) coordinate of the top-left corner followed by width and height.

The "score" is a number between zero and one.
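The format described here can be unpacked with a few lines of plain Python. The following is a sketch under stated assumptions: the helper names `split_keypoints` and `bbox_corners` are hypothetical, and the annotation is shortened to three joints for illustration (a COCO person annotation has 17).

```python
def split_keypoints(flat):
    """Group a flat COCO keypoint list into (x, y, c) triples."""
    if len(flat) % 3 != 0:
        raise ValueError('keypoint list length must be a multiple of 3')
    return [tuple(flat[i:i + 3]) for i in range(0, len(flat), 3)]


def bbox_corners(bbox):
    """Convert (x, y, w, h) into (x_min, y_min, x_max, y_max)."""
    x, y, w, h = bbox
    return (x, y, x + w, y + h)


# Shortened, illustrative annotation (three joints instead of 17).
annotation = {
    'keypoints': [360.18, 299.65, 1.0, 364.04, 294.79, 0.96, 0.0, -3.0, 0.0],
    'bbox': [321.09, 292.01, 74.9, 96.17],
    'score': 0.821,
    'category_id': 1,
}

joints = split_keypoints(annotation['keypoints'])
# A confidence of zero means the joint was not detected.
detected = [(x, y) for x, y, c in joints if c > 0.0]
print(len(joints), len(detected))
print(bbox_corners(annotation['bbox']))
```

Filtering on c before using x and y avoids treating the coordinates of undetected joints as real positions.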

%%bash

python -m json.tool coco/000000081988.jpg.predictions.json

[

{

"keypoints": [

360.18,

299.65,

1.0,

364.04,

294.79,

0.96,

355.07,

295.04,

0.98,

369.58,

297.13,

0.83,

347.77,

298.04,

0.94,

381.79,

317.4,

0.94,

341.23,

321.81,

0.95,

387.61,

341.62,

0.62,

335.08,

350.91,

0.96,

373.34,

356.88,

0.44,

335.82,

363.87,

0.9,

373.8,

362.83,

0.86,

350.46,

364.86,

0.96,

388.86,

361.72,

0.66,

328.08,

374.7,

0.69,

338.47,

381.58,

0.34,

0.0,

-3.0,

0.0

],

"bbox": [

321.09,

292.01,

74.9,

96.17

],

"score": 0.821,

"category_id": 1

},

{

"keypoints": [

81.04,

316.39,

0.8,

84.85,

313.13,

0.88,

79.19,

312.31,

0.67,

99.87,

308.71,

0.96,

0.0,

-3.0,

0.0,

121.95,

317.08,

1.0,

78.87,

322.75,

0.98,

145.26,

347.63,

0.99,

59.34,

349.75,

0.96,

125.94,

353.11,

0.96,

52.33,

381.5,

0.97,

121.69,

359.37,

0.97,

95.56,

363.15,

0.97,

152.58,

360.37,

0.91,

72.03,

367.25,

0.88,

0.0,

-3.0,

0.0,

0.0,

-3.0,

0.0

],

"bbox": [

45.13,

305.11,

116.65,

83.59

],

"score": 0.819,

"category_id": 1

},

{

"keypoints": [

0.0,

-3.0,

0.0,

0.0,

-3.0,

0.0,

0.0,

-3.0,

0.0,

388.01,

149.0,

1.0,

410.68,

149.9,

0.95,

376.6,

177.27,

0.98,

425.45,

178.19,

0.98,

337.24,

190.82,

0.98,

463.56,

192.09,

0.98,

301.44,

193.76,

0.98,

495.15,

185.29,

0.97,

389.34,

251.83,

0.97,

414.38,

251.49,

0.98,

384.25,

315.64,

0.82,

409.22,

317.9,

0.96,

0.0,

-3.0,

0.0,

405.56,

367.51,

0.66

],

"bbox": [

289.84,

142.48,

216.49,

234.07

],

"score": 0.788,

"category_id": 1

},

{

"keypoints": [

239.69,

318.88,

0.48,

0.0,

-3.0,

0.0,

235.94,

316.98,

0.68,

0.0,

-3.0,

0.0,

222.3,

314.72,

0.84,

241.28,

320.26,

0.93,

201.98,

316.96,

1.0,

241.98,

351.26,

0.83,

197.04,

349.91,

0.78,

240.17,

378.14,

0.77,

195.31,

375.14,

0.67,

222.78,

339.59,

0.61,

199.07,

340.1,

0.58,

222.55,

374.28,

0.42,

0.0,

-3.0,

0.0,

0.0,

-3.0,

0.0,

0.0,

-3.0,

0.0

],

"bbox": [

190.44,

312.1,

56.65,

70.61

],

"score": 0.614,

"category_id": 1

},

{

"keypoints": [

493.12,

349.29,

0.61,

494.84,

345.06,

0.55,

488.95,

346.07,

0.53,

503.2,

333.74,

0.44,

0.0,

-3.0,

0.0,

522.08,

331.19,

0.76,

492.33,

334.51,

0.55,

549.21,

351.31,

0.65,

0.0,

-3.0,

0.0,

0.0,

-3.0,

0.0,

0.0,

-3.0,

0.0,

542.72,

344.51,

0.86,

523.54,

346.43,

1.0,

522.31,

377.06,

0.81,

501.11,

378.52,

0.88,

566.24,

384.99,

0.62,

0.0,

-3.0,

0.0

],

"bbox": [

486.3,

325.06,

87.45,

67.44

],

"score": 0.597,

"category_id": 1

}

]
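A JSON file like the one above can be summarized with the standard library alone. This is a minimal sketch, not part of OpenPifPaf: the function names are hypothetical, and the inline `sample` is a tiny stand-in for a real `*.predictions.json` file so that the block runs on its own.

```python
import json


def detected_joint_counts(annotations):
    """Count joints with confidence c > 0 for each annotation."""
    return [
        sum(1 for c in ann['keypoints'][2::3] if c > 0.0)
        for ann in annotations
    ]


def filter_by_score(annotations, min_score):
    """Keep annotations whose "score" is at least min_score."""
    return [ann for ann in annotations if ann['score'] >= min_score]


# Tiny stand-in for the contents of a *.predictions.json file.
sample = json.loads('''
[
  {"keypoints": [10.5, 20.0, 0.9, 0.0, -3.0, 0.0], "bbox": [5, 5, 10, 20],
   "score": 0.8, "category_id": 1},
  {"keypoints": [0.0, -3.0, 0.0, 0.0, -3.0, 0.0], "bbox": [0, 0, 1, 1],
   "score": 0.3, "category_id": 1}
]
''')

print(detected_joint_counts(sample))
print(len(filter_by_score(sample, 0.5)))
```

To apply it to the real output, replace `sample` with the result of `json.load` on coco/000000081988.jpg.predictions.json. Counting the non-zero confidences in the listing above by hand gives 16, 14, 13, 12 and 12, which appears to be what the `annotations 5: [16, 14, 13, 12, 12]` log line reports.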

Optional Arguments:

--show: show interactive matplotlib output

--debug-indices: enable debug messages and debug plots (see Examples)

The full list of arguments is available with --help: CLI help for predict.

Videos

python3 -m openpifpaf.video --source myvideotoprocess.mp4 --video-output --json-output

Requires OpenCV. The --video-output option also requires matplotlib.

Replace myvideotoprocess.mp4 with 0 for webcam0 or other OpenCV compatible sources.
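When the video tool is launched from a script, the command line can be assembled in Python. The sketch below only uses the flags shown in this section; the helper `video_command` is hypothetical, and the actual launch is left commented out because it requires OpenPifPaf, OpenCV and a working video source.

```python
import sys


def video_command(source, video_output=True, json_output=True):
    """Build the argument list for `python -m openpifpaf.video`.

    `source` is a file name or an OpenCV source such as 0 for the first
    webcam.
    """
    cmd = [sys.executable, '-m', 'openpifpaf.video', '--source', str(source)]
    if video_output:
        cmd.append('--video-output')
    if json_output:
        cmd.append('--json-output')
    return cmd


print(video_command(0))
# To actually run it (requires OpenPifPaf and OpenCV):
# import subprocess
# subprocess.run(video_command(0), check=True)
```

Using `sys.executable` keeps the subprocess in the same Python environment as the calling script.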

The full list of arguments is available with --help: CLI help for video.

In v0.12.6, we introduced the ability to pipe the output to a virtual camera. This virtual camera can then be used as the source camera in Zoom and other conferencing software. You need a virtual camera on your system, e.g. from OBS Studio (Mac and Windows) or v4l2loopback (Linux), and you need to install pyvirtualcam with pip3 install pyvirtualcam. Then you can use the --video-output=virtualcam argument.
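Before selecting the virtual camera output, a script can check that the Python dependency is installed. This is a small sketch with a hypothetical helper `module_available`; it only checks for the pyvirtualcam package and cannot tell whether a virtual camera device from OBS Studio or v4l2loopback is present.

```python
import importlib.util


def module_available(name):
    """Return True if the module `name` can be found without importing it."""
    return importlib.util.find_spec(name) is not None


if module_available('pyvirtualcam'):
    print('pyvirtualcam found: --video-output=virtualcam should be usable')
else:
    print('pyvirtualcam missing: run "pip3 install pyvirtualcam" first')
```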

Debug

Obtain extra information by adding --debug to the command line. It will show the structure of the neural network and timing information in the decoder.

%%bash

python -m openpifpaf.predict coco/000000081988.jpg --image-output --json-output --debug

INFO:__main__:neural network device: cpu (CUDA available: False, count: 0)

DEBUG:openpifpaf.show.painters:color connections = False, lw = 6, marker = 3

DEBUG:openpifpaf.network.factory:Shell(

(base_net): ShuffleNetV2K(

(input_block): Sequential(

(0): Sequential(

(0): Conv2d(3, 24, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(1): BatchNorm2d(24, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

)

(stage2): Sequential(

(0): InvertedResidualK(

(branch1): Sequential(

(0): Conv2d(24, 24, kernel_size=(5, 5), stride=(2, 2), padding=(2, 2), groups=24, bias=False)

(1): BatchNorm2d(24, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): Conv2d(24, 174, kernel_size=(1, 1), stride=(1, 1), bias=False)

(3): BatchNorm2d(174, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

)

(branch2): Sequential(

(0): Conv2d(24, 174, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(174, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(174, 174, kernel_size=(5, 5), stride=(2, 2), padding=(2, 2), groups=174, bias=False)

(4): BatchNorm2d(174, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(5): Conv2d(174, 174, kernel_size=(1, 1), stride=(1, 1), bias=False)

(6): BatchNorm2d(174, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(7): ReLU(inplace=True)

)

)

(1): InvertedResidualK(

(branch2): Sequential(

(0): Conv2d(174, 174, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(174, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(174, 174, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=174, bias=False)

(4): BatchNorm2d(174, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(5): Conv2d(174, 174, kernel_size=(1, 1), stride=(1, 1), bias=False)

(6): BatchNorm2d(174, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(7): ReLU(inplace=True)

)

)

(2): InvertedResidualK(

(branch2): Sequential(

(0): Conv2d(174, 174, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(174, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(174, 174, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=174, bias=False)

(4): BatchNorm2d(174, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(5): Conv2d(174, 174, kernel_size=(1, 1), stride=(1, 1), bias=False)

(6): BatchNorm2d(174, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(7): ReLU(inplace=True)

)

)

(3): InvertedResidualK(

(branch2): Sequential(

(0): Conv2d(174, 174, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(174, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(174, 174, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=174, bias=False)

(4): BatchNorm2d(174, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(5): Conv2d(174, 174, kernel_size=(1, 1), stride=(1, 1), bias=False)

(6): BatchNorm2d(174, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(7): ReLU(inplace=True)

)

)

)

(stage3): Sequential(

(0): InvertedResidualK(

(branch1): Sequential(

(0): Conv2d(348, 348, kernel_size=(5, 5), stride=(2, 2), padding=(2, 2), groups=348, bias=False)

(1): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): Conv2d(348, 348, kernel_size=(1, 1), stride=(1, 1), bias=False)

(3): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

)

(branch2): Sequential(

(0): Conv2d(348, 348, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(348, 348, kernel_size=(5, 5), stride=(2, 2), padding=(2, 2), groups=348, bias=False)

(4): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(5): Conv2d(348, 348, kernel_size=(1, 1), stride=(1, 1), bias=False)

(6): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(7): ReLU(inplace=True)

)

)

(1): InvertedResidualK(

(branch2): Sequential(

(0): Conv2d(348, 348, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(348, 348, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=348, bias=False)

(4): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(5): Conv2d(348, 348, kernel_size=(1, 1), stride=(1, 1), bias=False)

(6): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(7): ReLU(inplace=True)

)

)

(2): InvertedResidualK(

(branch2): Sequential(

(0): Conv2d(348, 348, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(348, 348, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=348, bias=False)

(4): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(5): Conv2d(348, 348, kernel_size=(1, 1), stride=(1, 1), bias=False)

(6): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(7): ReLU(inplace=True)

)

)

(3): InvertedResidualK(

(branch2): Sequential(

(0): Conv2d(348, 348, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(348, 348, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=348, bias=False)

(4): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(5): Conv2d(348, 348, kernel_size=(1, 1), stride=(1, 1), bias=False)

(6): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(7): ReLU(inplace=True)

)

)

(4): InvertedResidualK(

(branch2): Sequential(

(0): Conv2d(348, 348, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(348, 348, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=348, bias=False)

(4): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(5): Conv2d(348, 348, kernel_size=(1, 1), stride=(1, 1), bias=False)

(6): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(7): ReLU(inplace=True)

)

)

(5): InvertedResidualK(

(branch2): Sequential(

(0): Conv2d(348, 348, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(348, 348, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=348, bias=False)

(4): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(5): Conv2d(348, 348, kernel_size=(1, 1), stride=(1, 1), bias=False)

(6): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(7): ReLU(inplace=True)

)

)

(6): InvertedResidualK(

(branch2): Sequential(

(0): Conv2d(348, 348, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(348, 348, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=348, bias=False)

(4): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(5): Conv2d(348, 348, kernel_size=(1, 1), stride=(1, 1), bias=False)

(6): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(7): ReLU(inplace=True)

)

)

(7): InvertedResidualK(

(branch2): Sequential(

(0): Conv2d(348, 348, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(348, 348, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=348, bias=False)

(4): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(5): Conv2d(348, 348, kernel_size=(1, 1), stride=(1, 1), bias=False)

(6): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(7): ReLU(inplace=True)

)

)

)

(stage4): Sequential(

(0): InvertedResidualK(

(branch1): Sequential(

(0): Conv2d(696, 696, kernel_size=(5, 5), stride=(2, 2), padding=(2, 2), groups=696, bias=False)

(1): BatchNorm2d(696, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): Conv2d(696, 696, kernel_size=(1, 1), stride=(1, 1), bias=False)

(3): BatchNorm2d(696, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

)

(branch2): Sequential(

(0): Conv2d(696, 696, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(696, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(696, 696, kernel_size=(5, 5), stride=(2, 2), padding=(2, 2), groups=696, bias=False)

(4): BatchNorm2d(696, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(5): Conv2d(696, 696, kernel_size=(1, 1), stride=(1, 1), bias=False)

(6): BatchNorm2d(696, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(7): ReLU(inplace=True)

)

)

(1): InvertedResidualK(

(branch2): Sequential(

(0): Conv2d(696, 696, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(696, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(696, 696, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=696, bias=False)

(4): BatchNorm2d(696, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(5): Conv2d(696, 696, kernel_size=(1, 1), stride=(1, 1), bias=False)

(6): BatchNorm2d(696, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(7): ReLU(inplace=True)

)

)

(2): InvertedResidualK(

(branch2): Sequential(

(0): Conv2d(696, 696, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(696, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(696, 696, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=696, bias=False)

(4): BatchNorm2d(696, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(5): Conv2d(696, 696, kernel_size=(1, 1), stride=(1, 1), bias=False)

(6): BatchNorm2d(696, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(7): ReLU(inplace=True)

)

)

(3): InvertedResidualK(

(branch2): Sequential(

(0): Conv2d(696, 696, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(696, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(696, 696, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=696, bias=False)

(4): BatchNorm2d(696, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(5): Conv2d(696, 696, kernel_size=(1, 1), stride=(1, 1), bias=False)

(6): BatchNorm2d(696, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(7): ReLU(inplace=True)

)

)

)

(conv5): Sequential(

(0): Conv2d(1392, 1392, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(1392, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

)

(head_nets): ModuleList(

(0): CompositeField4(

(dropout): Dropout2d(p=0.0, inplace=False)

(conv): Conv2d(1392, 340, kernel_size=(1, 1), stride=(1, 1))

(upsample_op): PixelShuffle(upscale_factor=2)

)

(1): CompositeField4(

(dropout): Dropout2d(p=0.0, inplace=False)

(conv): Conv2d(1392, 608, kernel_size=(1, 1), stride=(1, 1))

(upsample_op): PixelShuffle(upscale_factor=2)

)

)

)

DEBUG:openpifpaf.decoder.factory:head names = ['cif', 'caf']

DEBUG:openpifpaf.visualizer.base:cif: indices = []

DEBUG:openpifpaf.show.painters:color connections = True, lw = 2, marker = 6

DEBUG:openpifpaf.show.painters:color connections = False, lw = 6, marker = 3

DEBUG:openpifpaf.visualizer.base:cif: indices = []

DEBUG:openpifpaf.visualizer.base:caf: indices = []

DEBUG:openpifpaf.show.painters:color connections = True, lw = 2, marker = 6

DEBUG:openpifpaf.show.painters:color connections = False, lw = 6, marker = 3

DEBUG:openpifpaf.signal:subscribe to eval_reset

DEBUG:openpifpaf.decoder.pose_similarity:valid keypoints = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16]

DEBUG:openpifpaf.visualizer.base:cif: indices = []

DEBUG:openpifpaf.show.painters:color connections = True, lw = 2, marker = 6

DEBUG:openpifpaf.show.painters:color connections = False, lw = 6, marker = 3

DEBUG:openpifpaf.visualizer.base:cif: indices = []

DEBUG:openpifpaf.visualizer.base:caf: indices = []

DEBUG:openpifpaf.show.painters:color connections = True, lw = 2, marker = 6

DEBUG:openpifpaf.show.painters:color connections = False, lw = 6, marker = 3

DEBUG:openpifpaf.decoder.factory:created 2 decoders

INFO:openpifpaf.decoder.factory:No specific decoder requested. Using the first one from:

--decoder=cifcaf:0

--decoder=posesimilarity:0

Use any of the above arguments to select one or multiple decoders and to suppress this message.

INFO:openpifpaf.predictor:neural network device: cpu (CUDA available: False, count: 0)

DEBUG:openpifpaf.transforms.pad:valid area before pad: [ 0. 0. 639. 426.], image size = (640, 427)

DEBUG:openpifpaf.transforms.pad:pad with [0, 3, 1, 3]

DEBUG:openpifpaf.transforms.pad:valid area after pad: [ 0. 3. 639. 426.], image size = (641, 433)

DEBUG:openpifpaf.decoder.decoder:nn processing time: 435.7ms

DEBUG:openpifpaf.decoder.decoder:parallel execution with worker <openpifpaf.decoder.decoder.DummyPool object at 0x7f69cad65dc0>

DEBUG:openpifpaf.decoder.multi:task 0

DEBUG:openpifpaf.decoder.cifcaf:cpp annotations = 5 (7.5ms)

INFO:openpifpaf.decoder.cifcaf:annotations 5: [16, 14, 13, 12, 12]

DEBUG:openpifpaf.decoder.decoder:time: nn = 436.0ms, dec = 8.5ms

INFO:openpifpaf.predictor:batch 0: coco/000000081988.jpg

DEBUG:__main__:json output = coco/000000081988.jpg.predictions.json

DEBUG:__main__:image output = coco/000000081988.jpg.predictions.jpeg

DEBUG:openpifpaf.show.canvas:writing image to coco/000000081988.jpg.predictions.jpeg

src/openpifpaf/csrc/src/cif_hr.cpp:102: UserInfo: resizing cifhr buffer

src/openpifpaf/csrc/src/occupancy.cpp:53: UserInfo: resizing occupancy buffer
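The timing lines in the debug output above can be extracted for benchmarking. The following is a sketch that assumes the log format stays exactly as printed here (`time: nn = ...ms, dec = ...ms`); the pattern and the helper `parse_timings` are hypothetical and may need adjusting for other versions.

```python
import re

# Matches lines such as "...decoder:time: nn = 436.0ms, dec = 8.5ms".
TIMING_RE = re.compile(r'time: nn = ([\d.]+)ms, dec = ([\d.]+)ms')


def parse_timings(log_text):
    """Return a list of (nn_ms, dec_ms) tuples found in debug log text."""
    return [(float(nn), float(dec)) for nn, dec in TIMING_RE.findall(log_text)]


# Two lines copied from the debug output above.
log_text = (
    'DEBUG:openpifpaf.decoder.decoder:nn processing time: 435.7ms\n'
    'DEBUG:openpifpaf.decoder.decoder:time: nn = 436.0ms, dec = 8.5ms\n'
)
print(parse_timings(log_text))
```

In this run the neural network forward pass dominates: about 436 ms on CPU compared with 8.5 ms for decoding.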

You can enable debug plots with --debug-indices. Please refer to the debug outputs in the Examples and some further debug outputs in the prediction API.