Cifar10¶

This page gives a quick introduction to OpenPifPaf’s Cifar10 plugin that is part of openpifpaf.plugins.

It demonstrates the plugin architecture.

There already is a nice dataset for CIFAR10 in torchvision and a related PyTorch tutorial.

The plugin adds a DataModule that uses this dataset.

Let’s start with the setup for this notebook and register all available OpenPifPaf plugins:

import openpifpaf

print(openpifpaf.plugin.REGISTERED.keys())

dict_keys(['openpifpaf.plugins.apollocar3d', 'openpifpaf.plugins.cifar10', 'openpifpaf.plugins.coco', 'openpifpaf.plugins.crowdpose'])

Next, we configure and instantiate the Cifar10 datamodule and look at the configured head metas:

# configure

openpifpaf.plugins.cifar10.datamodule.Cifar10.debug = True

openpifpaf.plugins.cifar10.datamodule.Cifar10.batch_size = 1

# instantiate and inspect

datamodule = openpifpaf.plugins.cifar10.datamodule.Cifar10()

datamodule.set_loader_workers(0) # no multi-processing to see debug outputs in main thread

datamodule.head_metas # last expression in the cell, so the notebook displays it

We see here that CIFAR10 is being treated as a detection dataset (CifDet) and has 10 categories.
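To make the "classification as detection" idea concrete, here is a minimal sketch of how a class label could be wrapped into a single detection-style annotation. The helper `label_to_detection` and its fixed default box are hypothetical and are not read from the plugin; the category order and the 1-based `category_id` follow the prediction output and the evaluation labels shown later on this page, and the `[x, y, width, height]` box layout is an assumption.

```python
# CIFAR10 class names in the order used by the outputs in this tutorial.
CIFAR10_CATEGORIES = (
    'plane', 'car', 'bird', 'cat', 'deer',
    'dog', 'frog', 'horse', 'ship', 'truck',
)


def label_to_detection(label, bbox=(5.0, 5.0, 21.0, 21.0)):
    """Wrap a 0-based class label into one detection-style annotation.

    Hypothetical helper: the default bbox is an assumption for illustration.
    """
    if not 0 <= label < len(CIFAR10_CATEGORIES):
        raise ValueError('label out of range: {}'.format(label))
    return {
        'category_id': label + 1,               # 1-based, as in the JSON output
        'category': CIFAR10_CATEGORIES[label],
        'bbox': list(bbox),                     # assumed [x, y, width, height]
    }


truck = label_to_detection(9)  # 0-based index 9 is "truck"
print(truck)
```

Note the two index conventions: field indices such as `cifdet:9:regression` count from zero, whereas `category_id` in the prediction output counts from one.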

To create a network, we construct a Factory with the name of the base network, cifar10net, and call its factory() method with the list of head metas.

net, _ = openpifpaf.network.Factory(base_name='cifar10net').factory(head_metas=datamodule.head_metas) # factory() returns a pair, as unpacked in the API prediction example

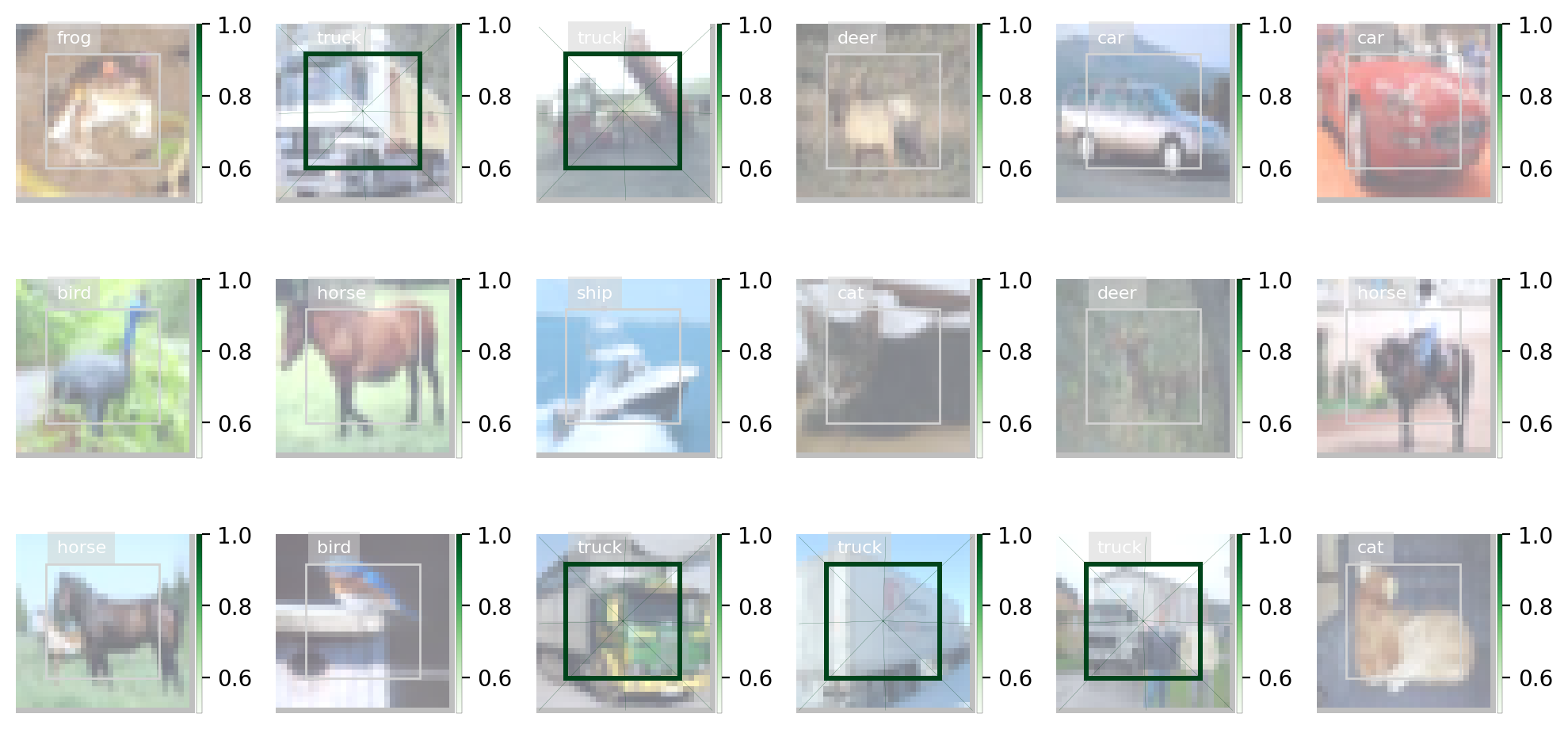

We can inspect the training data that is returned from datamodule.train_loader():

# configure visualization

openpifpaf.visualizer.Base.set_all_indices(['cifdet:9:regression']) # category 9 = truck

# Create a wrapper for a data loader that iterates over a set of matplotlib axes.

# The only purpose is to set a different matplotlib axis before each call to

# retrieve the next image from the data_loader so that it produces multiple

# debug images in one canvas side-by-side.

def loop_over_axes(axes, data_loader):
    previous_common_ax = openpifpaf.visualizer.Base.common_ax
    train_loader_iter = iter(data_loader)
    for ax in axes.reshape(-1):
        openpifpaf.visualizer.Base.common_ax = ax
        yield next(train_loader_iter, None)
    openpifpaf.visualizer.Base.common_ax = previous_common_ax

# create a canvas and loop over the first few entries in the training data
with openpifpaf.show.canvas(ncols=6, nrows=3, figsize=(10, 5)) as axs:
    for images, targets, meta in loop_over_axes(axs, datamodule.train_loader()):
        pass
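The wrapper above is a plain generator that changes shared state right before it produces each item. The following sketch isolates that pattern without OpenPifPaf or matplotlib; `Visualizer` and `loop_over_slots` are stand-in names invented for this illustration.

```python
class Visualizer:
    """Stand-in for a class that keeps shared, class-level drawing state."""
    common_ax = None


def loop_over_slots(slots, items):
    previous = Visualizer.common_ax
    item_iter = iter(items)
    for slot in slots:
        Visualizer.common_ax = slot   # set before the next item is produced
        yield next(item_iter, None)   # None once the items run out
    Visualizer.common_ax = previous   # runs only when the generator is exhausted


seen = []
for item in loop_over_slots(['ax0', 'ax1', 'ax2'], ['img0', 'img1']):
    seen.append((Visualizer.common_ax, item))

print(seen)
print(Visualizer.common_ax)
```

Because the restore step sits after the loop, it only runs when the consumer iterates to the end. A consumer that breaks out early would leave the last slot in place.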

Training¶

We train a very small network, cifar10net, for only one epoch. Afterwards, we will investigate its predictions.

%%bash

python -m openpifpaf.train --dataset=cifar10 --basenet=cifar10net --epochs=1 --log-interval=500 --lr-warm-up-epochs=0.1 --lr=3e-3 --batch-size=16 --loader-workers=2 --output=cifar10_tutorial.pkl

INFO:__main__:neural network device: cpu (CUDA available: False, count: 0)

INFO:openpifpaf.network.basenetworks:cifar10net: stride = 16, output features = 128

INFO:openpifpaf.network.losses.multi_head:multihead loss: ['cifar10.cifdet.c', 'cifar10.cifdet.vec1', 'cifar10.cifdet.vec2'], [1.0, 1.0, 1.0]

INFO:openpifpaf.logger:{'type': 'process', 'argv': ['/opt/hostedtoolcache/Python/3.7.10/x64/lib/python3.7/site-packages/openpifpaf/train.py', '--dataset=cifar10', '--basenet=cifar10net', '--epochs=1', '--log-interval=500', '--lr-warm-up-epochs=0.1', '--lr=3e-3', '--batch-size=16', '--loader-workers=2', '--output=cifar10_tutorial.pkl'], 'args': {'output': 'cifar10_tutorial.pkl', 'disable_cuda': False, 'ddp': False, 'local_rank': None, 'sync_batchnorm': True, 'quiet': False, 'debug': False, 'log_stats': False, 'resnet_pretrained': True, 'resnet_pool0_stride': 0, 'resnet_input_conv_stride': 2, 'resnet_input_conv2_stride': 0, 'resnet_block5_dilation': 1, 'resnet_remove_last_block': False, 'shufflenetv2k_input_conv2_stride': 0, 'shufflenetv2k_input_conv2_outchannels': None, 'shufflenetv2k_stage4_dilation': 1, 'shufflenetv2k_kernel': 5, 'shufflenetv2k_conv5_as_stage': False, 'shufflenetv2k_instance_norm': False, 'shufflenetv2k_group_norm': False, 'shufflenetv2k_leaky_relu': False, 'mobilenetv2_pretrained': True, 'squeezenet_pretrained': True, 'shufflenetv2_pretrained': True, 'cf3_dropout': 0.0, 'cf3_inplace_ops': True, 'checkpoint': None, 'basenet': 'cifar10net', 'cross_talk': 0.0, 'download_progress': True, 'head_consolidation': 'filter_and_extend', 'lambdas': None, 'component_lambdas': None, 'auto_tune_mtl': False, 'auto_tune_mtl_variance': False, 'task_sparsity_weight': 0.0, 'loss_prescale': 1.0, 'regression_loss': 'laplace', 'background_weight': 1.0, 'focal_alpha': 0.5, 'focal_gamma': 1.0, 'focal_detach': False, 'focal_clamp': True, 'bce_min': 0.0, 'bce_soft_clamp': 5.0, 'r_smooth': 0.0, 'laplace_soft_clamp': 5.0, 'b_scale': 1.0, 'scale_log': False, 'scale_soft_clamp': 5.0, 'epochs': 1, 'train_batches': None, 'val_batches': None, 'clip_grad_norm': 0.0, 'clip_grad_value': 0.0, 'log_interval': 500, 'val_interval': 1, 'stride_apply': 1, 'fix_batch_norm': False, 'ema': 0.01, 'profile': None, 'cif_side_length': 4, 'caf_min_size': 3, 'caf_fixed_size': False, 
'caf_aspect_ratio': 0.0, 'encoder_suppress_selfhidden': True, 'momentum': 0.9, 'beta2': 0.999, 'adam_eps': 1e-06, 'nesterov': True, 'weight_decay': 0.0, 'adam': False, 'amsgrad': False, 'lr': 0.003, 'lr_decay': [], 'lr_decay_factor': 0.1, 'lr_decay_epochs': 1.0, 'lr_warm_up_start_epoch': 0, 'lr_warm_up_epochs': 0.1, 'lr_warm_up_factor': 0.001, 'lr_warm_restarts': [], 'lr_warm_restart_duration': 0.5, 'dataset': 'cifar10', 'loader_workers': 2, 'batch_size': 16, 'dataset_weights': None, 'apollo_train_annotations': 'data/apollo-coco/annotations/apollo_keypoints_66_train.json', 'apollo_val_annotations': 'data/apollo-coco/annotations/apollo_keypoints_66_val.json', 'apollo_train_image_dir': 'data/apollo-coco/images/train/', 'apollo_val_image_dir': 'data/apollo-coco/images/val/', 'apollo_square_edge': 513, 'apollo_extended_scale': False, 'apollo_orientation_invariant': 0.0, 'apollo_blur': 0.0, 'apollo_augmentation': True, 'apollo_rescale_images': 1.0, 'apollo_upsample': 1, 'apollo_min_kp_anns': 1, 'apollo_bmin': 1, 'apollo_eval_annotation_filter': True, 'apollo_eval_long_edge': 0, 'apollo_eval_extended_scale': False, 'apollo_eval_orientation_invariant': 0.0, 'apollo_use_24_kps': False, 'cifar10_root_dir': 'data-cifar10/', 'cifar10_download': False, 'cocodet_train_annotations': 'data-mscoco/annotations/instances_train2017.json', 'cocodet_val_annotations': 'data-mscoco/annotations/instances_val2017.json', 'cocodet_train_image_dir': 'data-mscoco/images/train2017/', 'cocodet_val_image_dir': 'data-mscoco/images/val2017/', 'cocodet_square_edge': 513, 'cocodet_extended_scale': False, 'cocodet_orientation_invariant': 0.0, 'cocodet_blur': 0.0, 'cocodet_augmentation': True, 'cocodet_rescale_images': 1.0, 'cocodet_upsample': 1, 'cocokp_train_annotations': 'data-mscoco/annotations/person_keypoints_train2017.json', 'cocokp_val_annotations': 'data-mscoco/annotations/person_keypoints_val2017.json', 'cocokp_train_image_dir': 'data-mscoco/images/train2017/', 'cocokp_val_image_dir': 
'data-mscoco/images/val2017/', 'cocokp_square_edge': 385, 'cocokp_with_dense': False, 'cocokp_extended_scale': False, 'cocokp_orientation_invariant': 0.0, 'cocokp_blur': 0.0, 'cocokp_augmentation': True, 'cocokp_rescale_images': 1.0, 'cocokp_upsample': 1, 'cocokp_min_kp_anns': 1, 'cocokp_bmin': 0.1, 'cocokp_eval_test2017': False, 'cocokp_eval_testdev2017': False, 'coco_eval_annotation_filter': True, 'coco_eval_long_edge': 641, 'coco_eval_extended_scale': False, 'coco_eval_orientation_invariant': 0.0, 'crowdpose_train_annotations': 'data-crowdpose/json/crowdpose_train.json', 'crowdpose_val_annotations': 'data-crowdpose/json/crowdpose_val.json', 'crowdpose_image_dir': 'data-crowdpose/images/', 'crowdpose_square_edge': 385, 'crowdpose_extended_scale': False, 'crowdpose_orientation_invariant': 0.0, 'crowdpose_augmentation': True, 'crowdpose_rescale_images': 1.0, 'crowdpose_upsample': 1, 'crowdpose_min_kp_anns': 1, 'crowdpose_eval_test': False, 'crowdpose_eval_long_edge': 641, 'crowdpose_eval_extended_scale': False, 'crowdpose_eval_orientation_invariant': 0.0, 'crowdpose_index': None, 'save_all': None, 'show': False, 'image_width': None, 'image_height': None, 'image_dpi_factor': 1.0, 'image_min_dpi': 50.0, 'show_file_extension': 'png', 'textbox_alpha': 0.5, 'text_color': 'white', 'font_size': 8, 'monocolor_connections': False, 'line_width': None, 'skeleton_solid_threshold': 0.5, 'show_box': False, 'white_overlay': False, 'show_joint_scales': False, 'show_joint_confidences': False, 'show_decoding_order': False, 'show_frontier_order': False, 'show_only_decoded_connections': False, 'video_fps': 10, 'video_dpi': 100, 'debug_indices': [], 'device': device(type='cpu'), 'pin_memory': False}, 'version': '0.12.7+1.g0d67fa9', 'plugin_versions': {}, 'hostname': 'fv-az272-9'}

INFO:openpifpaf.optimize:SGD optimizer

INFO:openpifpaf.optimize:training batches per epoch = 3125

INFO:openpifpaf.network.trainer:{'type': 'config', 'field_names': ['cifar10.cifdet.c', 'cifar10.cifdet.vec1', 'cifar10.cifdet.vec2']}

INFO:openpifpaf.network.trainer:model written: cifar10_tutorial.pkl.epoch000

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 0, 'n_batches': 3125, 'time': 0.05, 'data_time': 0.071, 'lr': 3e-06, 'loss': 44.842, 'head_losses': [15.502, 11.609, 17.73]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 500, 'n_batches': 3125, 'time': 0.034, 'data_time': 0.006, 'lr': 0.003, 'loss': 17.137, 'head_losses': [7.572, 4.783, 4.781]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 1000, 'n_batches': 3125, 'time': 0.034, 'data_time': 0.002, 'lr': 0.003, 'loss': 16.717, 'head_losses': [7.135, 4.79, 4.792]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 1500, 'n_batches': 3125, 'time': 0.034, 'data_time': 0.002, 'lr': 0.003, 'loss': 16.361, 'head_losses': [6.757, 4.801, 4.803]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 2000, 'n_batches': 3125, 'time': 0.033, 'data_time': 0.001, 'lr': 0.003, 'loss': 16.237, 'head_losses': [6.656, 4.791, 4.789]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 2500, 'n_batches': 3125, 'time': 0.035, 'data_time': 0.002, 'lr': 0.003, 'loss': 15.426, 'head_losses': [5.836, 4.797, 4.793]}

INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 3000, 'n_batches': 3125, 'time': 0.034, 'data_time': 0.002, 'lr': 0.003, 'loss': 16.147, 'head_losses': [6.563, 4.794, 4.79]}

INFO:openpifpaf.network.trainer:applying ema

INFO:openpifpaf.network.trainer:{'type': 'train-epoch', 'epoch': 1, 'loss': 18.27662, 'head_losses': [7.25327, 5.32942, 5.69393], 'time': 111.8, 'n_clipped_grad': 0, 'max_norm': 0.0}

INFO:openpifpaf.network.trainer:model written: cifar10_tutorial.pkl.epoch001

INFO:openpifpaf.network.trainer:{'type': 'val-epoch', 'epoch': 1, 'loss': 15.60351, 'head_losses': [6.02964, 4.78945, 4.78442], 'time': 15.4}
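Two numbers in this log can be checked by hand. The 50000 CIFAR10 training images at a batch size of 16 give the 3125 batches per epoch, and the learning rate of 3e-06 at batch 0 equals the target rate times the logged lr_warm_up_factor of 0.001. The sketch below reproduces both. The exponential shape of the ramp between those endpoints is an assumption made for illustration; the log only confirms the start value and that the full rate is reached by batch 500.

```python
N_TRAIN_IMAGES = 50000   # size of the CIFAR10 training split
BATCH_SIZE = 16          # --batch-size
LR = 3e-3                # --lr
WARM_UP_EPOCHS = 0.1     # --lr-warm-up-epochs
WARM_UP_FACTOR = 0.001   # 'lr_warm_up_factor' in the logged args

batches_per_epoch = N_TRAIN_IMAGES // BATCH_SIZE


def warm_up_lr(batch):
    """Assumed schedule: exponential ramp from LR * WARM_UP_FACTOR up to LR."""
    progress = batch / (WARM_UP_EPOCHS * batches_per_epoch)
    if progress >= 1.0:
        return LR
    return LR * WARM_UP_FACTOR ** (1.0 - progress)


print(batches_per_epoch)
for batch in (0, 100, 200, 312, 500):
    print(batch, '{:.2e}'.format(warm_up_lr(batch)))
```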

Plot Training Logs¶

You can create a set of plots from the command line with python -m openpifpaf.logs cifar10_tutorial.pkl.log. You can also overlay multiple runs. Below we call the plotting code from that command directly to show the output in this notebook.

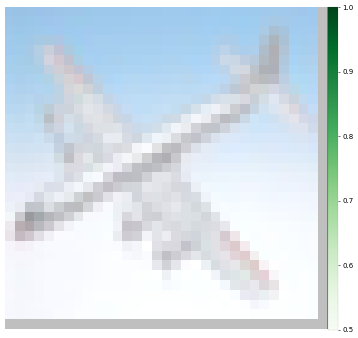

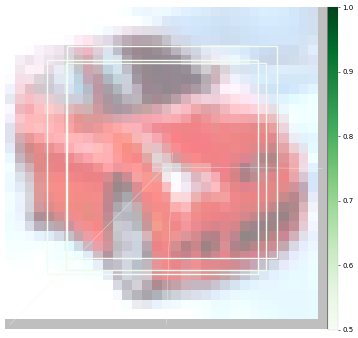
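If you only have the console output from the training run, the loss values can also be pulled out by hand. This is a minimal sketch that parses the trainer lines as printed in the Training section above; it is not how openpifpaf.logs works internally, and the format of the .log file itself may differ from the console format.

```python
import ast

PREFIX = 'INFO:openpifpaf.network.trainer:'

# A few lines copied from the training output above.
LOG_LINES = [
    "INFO:openpifpaf.optimize:SGD optimizer",
    "INFO:openpifpaf.network.trainer:model written: cifar10_tutorial.pkl.epoch000",
    "INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 500, 'n_batches': 3125, 'time': 0.034, 'data_time': 0.006, 'lr': 0.003, 'loss': 17.137, 'head_losses': [7.572, 4.783, 4.781]}",
    "INFO:openpifpaf.network.trainer:{'type': 'train', 'epoch': 0, 'batch': 1000, 'n_batches': 3125, 'time': 0.034, 'data_time': 0.002, 'lr': 0.003, 'loss': 16.717, 'head_losses': [7.135, 4.79, 4.792]}",
    "INFO:openpifpaf.network.trainer:applying ema",
    "INFO:openpifpaf.network.trainer:{'type': 'val-epoch', 'epoch': 1, 'loss': 15.60351, 'head_losses': [6.02964, 4.78945, 4.78442], 'time': 15.4}",
]


def parse_entries(lines):
    """Return the dict payloads of trainer lines, skipping free-text messages."""
    entries = []
    for line in lines:
        if not line.startswith(PREFIX):
            continue
        payload = line[len(PREFIX):]
        if not payload.startswith('{'):
            continue  # e.g. "model written: ..." or "applying ema"
        entries.append(ast.literal_eval(payload))
    return entries


entries = parse_entries(LOG_LINES)
train_entries = [e for e in entries if e['type'] == 'train']
val_entries = [e for e in entries if e['type'] == 'val-epoch']

for e in train_entries:
    print(e['batch'], e['loss'], e['head_losses'])
print('validation loss:', val_entries[0]['loss'])
```

The payloads are Python dict literals with single quotes, so `ast.literal_eval` is used instead of `json.loads`.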

Prediction¶

First, using the command line interface:

%%bash

python -m openpifpaf.predict --checkpoint cifar10_tutorial.pkl.epoch001 images/cifar10_*.png --seed-threshold=0.1 --json-output .

INFO:__main__:neural network device: cpu (CUDA available: False, count: 0)

WARNING:openpifpaf.decoder.cifcaf:consistency: decreasing keypoint threshold to seed threshold of 0.100000

INFO:openpifpaf.decoder.cifdet:annotations 1, decoder = 0.002s

INFO:__main__:batch 0: images/cifar10_airplane4.png

INFO:openpifpaf.decoder.cifdet:annotations 1, decoder = 0.001s

INFO:__main__:batch 1: images/cifar10_automobile10.png

INFO:openpifpaf.decoder.cifdet:annotations 1, decoder = 0.001s

INFO:__main__:batch 2: images/cifar10_ship7.png

INFO:openpifpaf.decoder.cifdet:annotations 1, decoder = 0.001s

INFO:__main__:batch 3: images/cifar10_truck8.png

%%bash

cat cifar10_*.json

[{"category_id": 1, "category": "plane", "score": 0.218, "bbox": [5.15, 4.51, 20.64, 21.18]}][{"category_id": 2, "category": "car", "score": 0.308, "bbox": [5.18, 5.46, 21.09, 21.54]}][{"category_id": 9, "category": "ship", "score": 0.226, "bbox": [4.97, 4.65, 20.96, 21.16]}][{"category_id": 10, "category": "truck", "score": 0.214, "bbox": [5.29, 5.29, 20.78, 20.77]}]
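Because cat concatenates the four files, the output above is a sequence of JSON documents rather than one valid document. As a small sketch, the standard library's `json.JSONDecoder.raw_decode` can read them one after another; the helper name `iter_json_documents` is made up for this example.

```python
import json

# The concatenated output of `cat cifar10_*.json` shown above.
CONCATENATED = (
    '[{"category_id": 1, "category": "plane", "score": 0.218, "bbox": [5.15, 4.51, 20.64, 21.18]}]'
    '[{"category_id": 2, "category": "car", "score": 0.308, "bbox": [5.18, 5.46, 21.09, 21.54]}]'
    '[{"category_id": 9, "category": "ship", "score": 0.226, "bbox": [4.97, 4.65, 20.96, 21.16]}]'
    '[{"category_id": 10, "category": "truck", "score": 0.214, "bbox": [5.29, 5.29, 20.78, 20.77]}]'
)


def iter_json_documents(text):
    """Yield each JSON document from a string of back-to-back documents."""
    decoder = json.JSONDecoder()
    pos = 0
    while pos < len(text):
        # raw_decode does not skip leading whitespace, so skip it here
        while pos < len(text) and text[pos].isspace():
            pos += 1
        if pos == len(text):
            break
        document, pos = decoder.raw_decode(text, pos)
        yield document


labels = []
for predictions in iter_json_documents(CONCATENATED):
    labels.append(['{} {:.0%}'.format(p['category'], p['score']) for p in predictions])

for line in labels:
    print(line)
```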

Using the API:

net_cpu, _ = openpifpaf.network.Factory(checkpoint='cifar10_tutorial.pkl.epoch001').factory()

preprocess = openpifpaf.transforms.Compose([
    openpifpaf.transforms.NormalizeAnnotations(),
    openpifpaf.transforms.CenterPadTight(16),
    openpifpaf.transforms.EVAL_TRANSFORM,
])

openpifpaf.decoder.utils.CifDetSeeds.threshold = 0.1

openpifpaf.decoder.utils.nms.Detection.instance_threshold = 0.1

decode = openpifpaf.decoder.factory([hn.meta for hn in net_cpu.head_nets])

data = openpifpaf.datasets.ImageList([
    'images/cifar10_airplane4.png',
    'images/cifar10_automobile10.png',
    'images/cifar10_ship7.png',
    'images/cifar10_truck8.png',
], preprocess=preprocess)

for image, _, meta in data:
    predictions = decode.batch(net_cpu, image.unsqueeze(0))[0]
    print(['{} {:.0%}'.format(pred.category, pred.score) for pred in predictions])

['plane 22%']

['car 31%']

['ship 23%']

['truck 21%']

Evaluation¶

I selected the above images because their category is clear to me. There are images in CIFAR10 where the category is harder to tell, and those are probably also harder for a neural network.

Therefore, we should run a proper quantitative evaluation with openpifpaf.eval. It stores its output as a json file, so we print that afterwards.

%%bash

python -m openpifpaf.eval --checkpoint cifar10_tutorial.pkl.epoch001 --dataset=cifar10 --seed-threshold=0.1 --instance-threshold=0.1 --quiet

[INFO] Register count_convNd() for <class 'torch.nn.modules.conv.Conv2d'>.

[WARN] Cannot find rule for <class 'openpifpaf.plugins.cifar10.basenet.Cifar10Net'>. Treat it as zero Macs and zero Params.

[WARN] Cannot find rule for <class 'torch.nn.modules.dropout.Dropout2d'>. Treat it as zero Macs and zero Params.

[WARN] Cannot find rule for <class 'openpifpaf.network.heads.CompositeField3'>. Treat it as zero Macs and zero Params.

[WARN] Cannot find rule for <class 'torch.nn.modules.container.ModuleList'>. Treat it as zero Macs and zero Params.

[WARN] Cannot find rule for <class 'openpifpaf.network.nets.Shell'>. Treat it as zero Macs and zero Params.

WARNING:openpifpaf.decoder.cifcaf:consistency: decreasing keypoint threshold to seed threshold of 0.100000

%%bash

python -m json.tool cifar10_tutorial.pkl.epoch001.eval-cifar10.stats.json

{
    "text_labels": [
        "total",
        "plane",
        "car",
        "bird",
        "cat",
        "deer",
        "dog",
        "frog",
        "horse",
        "ship",
        "truck"
    ],
    "stats": [
        0.4376,
        0.416,
        0.629,
        0.17,
        0.25,
        0.35,
        0.338,
        0.477,
        0.562,
        0.676,
        0.508
    ],
    "args": [
        "/opt/hostedtoolcache/Python/3.7.10/x64/lib/python3.7/site-packages/openpifpaf/eval.py",
        "--checkpoint",
        "cifar10_tutorial.pkl.epoch001",
        "--dataset=cifar10",
        "--seed-threshold=0.1",
        "--instance-threshold=0.1",
        "--quiet"
    ],
    "version": "0.12.7+1.g0d67fa9",
    "dataset": "cifar10",
    "total_time": 47.953648805618286,
    "checkpoint": "cifar10_tutorial.pkl.epoch001",
    "count_ops": [
        427119430.0,
        106470.0
    ],
    "file_size": 438537,
    "n_images": 10000,
    "decoder_time": 16.370033962998264,
    "nn_time": 22.255049173008047
}

We see that some categories like “ship” (0.676) and “car” (0.629) are learned quickly whereas others are learned poorly (e.g. “bird” at 0.17). The poor performance is not surprising as we trained our network for a single epoch only.
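To read the stats file more easily, the labels and values can be paired up and ranked. This sketch assumes that each entry in "stats" belongs to the label at the same position in "text_labels"; the values are copied from the JSON output above.

```python
TEXT_LABELS = ['total', 'plane', 'car', 'bird', 'cat', 'deer',
               'dog', 'frog', 'horse', 'ship', 'truck']
STATS = [0.4376, 0.416, 0.629, 0.17, 0.25, 0.35,
         0.338, 0.477, 0.562, 0.676, 0.508]

total = STATS[0]
per_class = dict(zip(TEXT_LABELS[1:], STATS[1:]))

# Rank the categories from best to worst.
ranked = sorted(per_class.items(), key=lambda item: item[1], reverse=True)
for name, value in ranked:
    print('{:<6} {:.3f}'.format(name, value))

# The "total" entry matches the unweighted mean of the per-class values.
mean_per_class = sum(per_class.values()) / len(per_class)
print('total: {:.4f}, mean of classes: {:.4f}'.format(total, mean_per_class))
```

That the mean of the ten per-class values reproduces the total is consistent with the CIFAR10 test set containing the same number of images for every category.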