Performance



Profile Decoder

The default Python and Cython decoder can be profiled with Python’s standard cProfile module. The profile can be rendered as a table sorted by internal time and as a flame graph; both are generated below:

%%bash

python -m openpifpaf.predict coco/000000081988.jpg --no-download-progress --debug --profile-decoder

INFO:__main__:neural network device: cpu (CUDA available: False, count: 0)

DEBUG:openpifpaf.show.painters:color connections = False, lw = 6, marker = 3

DEBUG:openpifpaf.network.factory:Shell(

(base_net): ShuffleNetV2K(

(input_block): Sequential(

(0): Sequential(

(0): Conv2d(3, 24, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(1): BatchNorm2d(24, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

)

(stage2): Sequential(

(0): InvertedResidualK(

(branch1): Sequential(

(0): Conv2d(24, 24, kernel_size=(5, 5), stride=(2, 2), padding=(2, 2), groups=24, bias=False)

(1): BatchNorm2d(24, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): Conv2d(24, 174, kernel_size=(1, 1), stride=(1, 1), bias=False)

(3): BatchNorm2d(174, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

)

(branch2): Sequential(

(0): Conv2d(24, 174, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(174, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(174, 174, kernel_size=(5, 5), stride=(2, 2), padding=(2, 2), groups=174, bias=False)

(4): BatchNorm2d(174, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(5): Conv2d(174, 174, kernel_size=(1, 1), stride=(1, 1), bias=False)

(6): BatchNorm2d(174, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(7): ReLU(inplace=True)

)

)

(1): InvertedResidualK(

(branch2): Sequential(

(0): Conv2d(174, 174, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(174, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(174, 174, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=174, bias=False)

(4): BatchNorm2d(174, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(5): Conv2d(174, 174, kernel_size=(1, 1), stride=(1, 1), bias=False)

(6): BatchNorm2d(174, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(7): ReLU(inplace=True)

)

)

(2): InvertedResidualK(

(branch2): Sequential(

(0): Conv2d(174, 174, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(174, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(174, 174, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=174, bias=False)

(4): BatchNorm2d(174, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(5): Conv2d(174, 174, kernel_size=(1, 1), stride=(1, 1), bias=False)

(6): BatchNorm2d(174, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(7): ReLU(inplace=True)

)

)

(3): InvertedResidualK(

(branch2): Sequential(

(0): Conv2d(174, 174, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(174, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(174, 174, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=174, bias=False)

(4): BatchNorm2d(174, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(5): Conv2d(174, 174, kernel_size=(1, 1), stride=(1, 1), bias=False)

(6): BatchNorm2d(174, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(7): ReLU(inplace=True)

)

)

)

(stage3): Sequential(

(0): InvertedResidualK(

(branch1): Sequential(

(0): Conv2d(348, 348, kernel_size=(5, 5), stride=(2, 2), padding=(2, 2), groups=348, bias=False)

(1): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): Conv2d(348, 348, kernel_size=(1, 1), stride=(1, 1), bias=False)

(3): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

)

(branch2): Sequential(

(0): Conv2d(348, 348, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(348, 348, kernel_size=(5, 5), stride=(2, 2), padding=(2, 2), groups=348, bias=False)

(4): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(5): Conv2d(348, 348, kernel_size=(1, 1), stride=(1, 1), bias=False)

(6): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(7): ReLU(inplace=True)

)

)

(1): InvertedResidualK(

(branch2): Sequential(

(0): Conv2d(348, 348, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(348, 348, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=348, bias=False)

(4): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(5): Conv2d(348, 348, kernel_size=(1, 1), stride=(1, 1), bias=False)

(6): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(7): ReLU(inplace=True)

)

)

(2): InvertedResidualK(

(branch2): Sequential(

(0): Conv2d(348, 348, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(348, 348, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=348, bias=False)

(4): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(5): Conv2d(348, 348, kernel_size=(1, 1), stride=(1, 1), bias=False)

(6): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(7): ReLU(inplace=True)

)

)

(3): InvertedResidualK(

(branch2): Sequential(

(0): Conv2d(348, 348, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(348, 348, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=348, bias=False)

(4): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(5): Conv2d(348, 348, kernel_size=(1, 1), stride=(1, 1), bias=False)

(6): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(7): ReLU(inplace=True)

)

)

(4): InvertedResidualK(

(branch2): Sequential(

(0): Conv2d(348, 348, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(348, 348, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=348, bias=False)

(4): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(5): Conv2d(348, 348, kernel_size=(1, 1), stride=(1, 1), bias=False)

(6): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(7): ReLU(inplace=True)

)

)

(5): InvertedResidualK(

(branch2): Sequential(

(0): Conv2d(348, 348, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(348, 348, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=348, bias=False)

(4): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(5): Conv2d(348, 348, kernel_size=(1, 1), stride=(1, 1), bias=False)

(6): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(7): ReLU(inplace=True)

)

)

(6): InvertedResidualK(

(branch2): Sequential(

(0): Conv2d(348, 348, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(348, 348, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=348, bias=False)

(4): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(5): Conv2d(348, 348, kernel_size=(1, 1), stride=(1, 1), bias=False)

(6): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(7): ReLU(inplace=True)

)

)

(7): InvertedResidualK(

(branch2): Sequential(

(0): Conv2d(348, 348, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(348, 348, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=348, bias=False)

(4): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(5): Conv2d(348, 348, kernel_size=(1, 1), stride=(1, 1), bias=False)

(6): BatchNorm2d(348, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(7): ReLU(inplace=True)

)

)

)

(stage4): Sequential(

(0): InvertedResidualK(

(branch1): Sequential(

(0): Conv2d(696, 696, kernel_size=(5, 5), stride=(2, 2), padding=(2, 2), groups=696, bias=False)

(1): BatchNorm2d(696, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): Conv2d(696, 696, kernel_size=(1, 1), stride=(1, 1), bias=False)

(3): BatchNorm2d(696, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(4): ReLU(inplace=True)

)

(branch2): Sequential(

(0): Conv2d(696, 696, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(696, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(696, 696, kernel_size=(5, 5), stride=(2, 2), padding=(2, 2), groups=696, bias=False)

(4): BatchNorm2d(696, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(5): Conv2d(696, 696, kernel_size=(1, 1), stride=(1, 1), bias=False)

(6): BatchNorm2d(696, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(7): ReLU(inplace=True)

)

)

(1): InvertedResidualK(

(branch2): Sequential(

(0): Conv2d(696, 696, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(696, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(696, 696, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=696, bias=False)

(4): BatchNorm2d(696, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(5): Conv2d(696, 696, kernel_size=(1, 1), stride=(1, 1), bias=False)

(6): BatchNorm2d(696, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(7): ReLU(inplace=True)

)

)

(2): InvertedResidualK(

(branch2): Sequential(

(0): Conv2d(696, 696, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(696, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(696, 696, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=696, bias=False)

(4): BatchNorm2d(696, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(5): Conv2d(696, 696, kernel_size=(1, 1), stride=(1, 1), bias=False)

(6): BatchNorm2d(696, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(7): ReLU(inplace=True)

)

)

(3): InvertedResidualK(

(branch2): Sequential(

(0): Conv2d(696, 696, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(696, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

(3): Conv2d(696, 696, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=696, bias=False)

(4): BatchNorm2d(696, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(5): Conv2d(696, 696, kernel_size=(1, 1), stride=(1, 1), bias=False)

(6): BatchNorm2d(696, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(7): ReLU(inplace=True)

)

)

)

(conv5): Sequential(

(0): Conv2d(1392, 1392, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(1392, eps=0.001, momentum=0.01, affine=True, track_running_stats=True)

(2): ReLU(inplace=True)

)

)

(head_nets): ModuleList(

(0): CompositeField4(

(dropout): Dropout2d(p=0.0, inplace=False)

(conv): Conv2d(1392, 340, kernel_size=(1, 1), stride=(1, 1))

(upsample_op): PixelShuffle(upscale_factor=2)

)

(1): CompositeField4(

(dropout): Dropout2d(p=0.0, inplace=False)

(conv): Conv2d(1392, 608, kernel_size=(1, 1), stride=(1, 1))

(upsample_op): PixelShuffle(upscale_factor=2)

)

)

)

DEBUG:openpifpaf.decoder.factory:head names = ['cif', 'caf']

DEBUG:openpifpaf.signal:subscribe to eval_reset

DEBUG:openpifpaf.decoder.pose_similarity:valid keypoints = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16]

DEBUG:openpifpaf.visualizer.base:cif: indices = []

DEBUG:openpifpaf.show.painters:color connections = True, lw = 2, marker = 6

DEBUG:openpifpaf.show.painters:color connections = False, lw = 6, marker = 3

DEBUG:openpifpaf.visualizer.base:cif: indices = []

DEBUG:openpifpaf.visualizer.base:caf: indices = []

DEBUG:openpifpaf.show.painters:color connections = True, lw = 2, marker = 6

DEBUG:openpifpaf.show.painters:color connections = False, lw = 6, marker = 3

DEBUG:openpifpaf.visualizer.base:cif: indices = []

DEBUG:openpifpaf.show.painters:color connections = True, lw = 2, marker = 6

DEBUG:openpifpaf.show.painters:color connections = False, lw = 6, marker = 3

DEBUG:openpifpaf.visualizer.base:cif: indices = []

DEBUG:openpifpaf.visualizer.base:caf: indices = []

DEBUG:openpifpaf.show.painters:color connections = True, lw = 2, marker = 6

DEBUG:openpifpaf.show.painters:color connections = False, lw = 6, marker = 3

DEBUG:openpifpaf.decoder.factory:created 2 decoders

INFO:openpifpaf.decoder.factory:No specific decoder requested. Using the first one from:

--decoder=cifcaf:0

--decoder=posesimilarity:0

Use any of the above arguments to select one or multiple decoders and to suppress this message.

INFO:openpifpaf.predictor:neural network device: cpu (CUDA available: False, count: 0)

DEBUG:openpifpaf.transforms.pad:valid area before pad: [ 0. 0. 639. 426.], image size = (640, 427)

DEBUG:openpifpaf.transforms.pad:pad with [0, 3, 1, 3]

DEBUG:openpifpaf.transforms.pad:valid area after pad: [ 0. 3. 639. 426.], image size = (641, 433)

DEBUG:openpifpaf.decoder.decoder:nn processing time: 752.8ms

DEBUG:openpifpaf.decoder.decoder:parallel execution with worker <openpifpaf.decoder.decoder.DummyPool object at 0x7ff66aa51ca0>

DEBUG:openpifpaf.decoder.multi:task 0

DEBUG:openpifpaf.decoder.cifcaf:cpp annotations = 5 (12.7ms)

INFO:openpifpaf.decoder.cifcaf:annotations 5: [16, 14, 13, 12, 12]

INFO:openpifpaf.profiler:writing profile file profile_decoder.prof

386 function calls in 0.015 seconds

Ordered by: internal time

ncalls tottime percall cumtime percall filename:lineno(function)

1 0.013 0.013 0.015 0.015 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/site-packages/openpifpaf/decoder/cifcaf.py:224(__call__)

5 0.000 0.000 0.000 0.000 {method 'tolist' of 'numpy.ndarray' objects}

5 0.000 0.000 0.001 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/site-packages/openpifpaf/annotation.py:17(__init__)

10 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/site-packages/numpy/core/fromnumeric.py:2188(sum)

15 0.000 0.000 0.000 0.000 {method 'numpy' of 'torch._C._TensorBase' objects}

10 0.000 0.000 0.000 0.000 {method 'reduce' of 'numpy.ufunc' objects}

10 0.000 0.000 0.000 0.000 {built-in method numpy.asarray}

2 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/logging/__init__.py:288(__init__)

2 0.000 0.000 0.000 0.000 {method 'flush' of '_io.TextIOWrapper' objects}

1 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/site-packages/openpifpaf/visualizer/cif.py:47(predicted)

1 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/site-packages/openpifpaf/decoder/cifcaf.py:276(<listcomp>)

2 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/logging/__init__.py:1689(isEnabledFor)

10 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/site-packages/numpy/core/fromnumeric.py:69(_wrapreduction)

2 0.000 0.000 0.000 0.000 {method 'unbind' of 'torch._C._TensorBase' objects}

1 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/site-packages/openpifpaf/visualizer/caf.py:47(predicted)

15 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/site-packages/torch/_tensor.py:952(__array__)

10 0.000 0.000 0.000 0.000 {built-in method numpy.zeros}

2 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/logging/__init__.py:364(getMessage)

1 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/site-packages/torch/_tensor.py:890(__len__)

2 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/site-packages/torch/_tensor.py:906(__iter__)

5 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/site-packages/openpifpaf/visualizer/base.py:114(indices)

2 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/logging/__init__.py:1514(findCaller)

10 0.000 0.000 0.000 0.000 <__array_function__ internals>:177(sum)

2 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/posixpath.py:140(basename)

10 0.000 0.000 0.000 0.000 {built-in method numpy.core._multiarray_umath.implement_array_function}

24 0.000 0.000 0.000 0.000 {built-in method builtins.len}

19 0.000 0.000 0.000 0.000 {built-in method builtins.isinstance}

2 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/logging/__init__.py:1073(emit)

2 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/logging/__init__.py:1565(_log)

2 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/logging/__init__.py:1645(callHandlers)

3 0.000 0.000 0.000 0.000 {built-in method torch._C._get_tracing_state}

15 0.000 0.000 0.000 0.000 {method 'astype' of 'numpy.ndarray' objects}

1 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/logging/__init__.py:1424(debug)

10 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/site-packages/numpy/core/fromnumeric.py:70(<dictcomp>)

2 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/logging/__init__.py:1062(flush)

2 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/logging/__init__.py:655(format)

16 0.000 0.000 0.000 0.000 {built-in method torch._C._has_torch_function_unary}

2 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/logging/__init__.py:1550(makeRecord)

2 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/posixpath.py:117(splitext)

2 0.000 0.000 0.000 0.000 {built-in method posix.getpid}

2 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/logging/__init__.py:435(_format)

2 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/logging/__init__.py:941(handle)

6 0.000 0.000 0.000 0.000 {method 'acquire' of '_thread.RLock' objects}

2 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/logging/__init__.py:160(<lambda>)

2 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/logging/__init__.py:218(_acquireLock)

2 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/genericpath.py:121(_splitext)

1 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/logging/__init__.py:1436(info)

1 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/site-packages/openpifpaf/visualizer/cifhr.py:17(predicted)

2 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/logging/__init__.py:1591(handle)

6 0.000 0.000 0.000 0.000 {method 'rfind' of 'str' objects}

2 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/logging/__init__.py:427(usesTime)

4 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/logging/__init__.py:796(filter)

2 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/posixpath.py:52(normcase)

2 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/logging/__init__.py:119(getLevelName)

2 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/logging/__init__.py:918(format)

10 0.000 0.000 0.000 0.000 {method 'items' of 'dict' objects}

4 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/logging/__init__.py:898(acquire)

6 0.000 0.000 0.000 0.000 {built-in method builtins.hasattr}

4 0.000 0.000 0.000 0.000 {method 'get' of 'dict' objects}

2 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/logging/__init__.py:639(formatMessage)

1 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/site-packages/openpifpaf/visualizer/cif.py:54(_confidences)

4 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/logging/__init__.py:905(release)

2 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/logging/__init__.py:1675(getEffectiveLevel)

2 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/logging/__init__.py:227(_releaseLock)

2 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/logging/__init__.py:438(format)

2 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/logging/__init__.py:633(usesTime)

10 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/site-packages/numpy/core/fromnumeric.py:2183(_sum_dispatcher)

2 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/site-packages/openpifpaf/headmeta.py:26(stride)

3 0.000 0.000 0.000 0.000 {method 'dim' of 'torch._C._TensorBase' objects}

2 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/threading.py:1306(current_thread)

1 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/site-packages/openpifpaf/visualizer/caf.py:54(_confidences)

2 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/multiprocessing/process.py:189(name)

2 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/threading.py:1031(name)

2 0.000 0.000 0.000 0.000 {method 'find' of 'str' objects}

2 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/posixpath.py:41(_get_sep)

5 0.000 0.000 0.000 0.000 {method 'append' of 'list' objects}

2 0.000 0.000 0.000 0.000 {built-in method time.perf_counter}

2 0.000 0.000 0.000 0.000 {built-in method sys._getframe}

1 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/site-packages/openpifpaf/visualizer/cif.py:63(_regressions)

6 0.000 0.000 0.000 0.000 {built-in method posix.fspath}

1 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/site-packages/openpifpaf/visualizer/caf.py:65(_regressions)

5 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/site-packages/openpifpaf/visualizer/base.py:118(<listcomp>)

2 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/multiprocessing/process.py:37(current_process)

6 0.000 0.000 0.000 0.000 {method 'release' of '_thread.RLock' objects}

2 0.000 0.000 0.000 0.000 {method 'write' of '_io.TextIOWrapper' objects}

2 0.000 0.000 0.000 0.000 /opt/hostedtoolcache/Python/3.8.16/x64/lib/python3.8/logging/__init__.py:1276(disable)

4 0.000 0.000 0.000 0.000 {built-in method _thread.get_ident}

2 0.000 0.000 0.000 0.000 {built-in method time.time}

2 0.000 0.000 0.000 0.000 {built-in method builtins.iter}

1 0.000 0.000 0.000 0.000 {method 'disable' of '_lsprof.Profiler' objects}

DEBUG:openpifpaf.decoder.decoder:time: nn = 753.1ms, dec = 16.8ms

INFO:openpifpaf.predictor:batch 0: coco/000000081988.jpg

src/openpifpaf/csrc/src/cif_hr.cpp:102: UserInfo: resizing cifhr buffer

src/openpifpaf/csrc/src/occupancy.cpp:53: UserInfo: resizing occupancy buffer

!flameprof profile_decoder.prof > profile_decoder_flame.svg
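Outside the predict CLI, the same workflow (record with cProfile, write a .prof file, render a table ordered by internal time) can be reproduced with the standard library alone. This is a minimal sketch under stated assumptions, not how OpenPifPaf implements --profile-decoder: toy_decoder, profile_to_file, sorted_table and the file name profile_toy.prof are hypothetical.

```python
import cProfile
import io
import pstats


def toy_decoder(n: int) -> list:
    """Hypothetical stand-in for a decoder call; not OpenPifPaf code."""
    annotations = []
    for _ in range(n):
        # Simulate some per-annotation work.
        annotations.append(sum(j * j for j in range(200)))
    return annotations


def profile_to_file(func, *args, path="profile_toy.prof"):
    """Run func under cProfile, dump the raw stats to path, return func's result."""
    profiler = cProfile.Profile()
    profiler.enable()
    try:
        result = func(*args)
    finally:
        profiler.disable()
    profiler.dump_stats(path)
    return result


def sorted_table(path, limit=10) -> str:
    """Load a .prof file and render a table ordered by internal time (tottime)."""
    buffer = io.StringIO()
    stats = pstats.Stats(path, stream=buffer)
    stats.sort_stats(pstats.SortKey.TIME).print_stats(limit)
    return buffer.getvalue()


if __name__ == "__main__":
    profile_to_file(toy_decoder, 5)
    print(sorted_table("profile_toy.prof"))
```

The .prof file written by dump_stats is the same kind of cProfile dump that flameprof reads in the cell above, so it can be turned into a flame graph the same way.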

There is a second output, generated by the autograd profiler, that can only be viewed in the Chrome browser. To view it, open chrome://tracing, click “Load” in the top left corner and select decoder_profile.1.json.
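The file loaded in chrome://tracing uses the Chrome Trace Event format (PyTorch's autograd profiler writes it through its export_chrome_trace method). As a hedged illustration of that format only, the sketch below writes a small trace using the standard library; it is not how OpenPifPaf produces decoder_profile.1.json, and the stage names, the helpers record_events and write_trace, and the file toy_trace.json are hypothetical.

```python
import json
import os
import time


def record_events(stages):
    """Time each (name, callable) pair and return Chrome Trace Event dicts.

    Uses complete events (ph="X"): a start timestamp plus a duration,
    both in microseconds, which is the unit chrome://tracing expects.
    """
    events = []
    origin = time.perf_counter()
    for name, func in stages:
        start = time.perf_counter()
        func()
        end = time.perf_counter()
        events.append({
            "name": name,
            "ph": "X",                       # complete event
            "ts": (start - origin) * 1e6,    # microseconds since origin
            "dur": (end - start) * 1e6,      # duration in microseconds
            "pid": os.getpid(),
            "tid": 0,
        })
    return events


def write_trace(events, path):
    """Write events in the JSON object form that chrome://tracing can load."""
    with open(path, "w") as f:
        json.dump({"traceEvents": events, "displayTimeUnit": "ms"}, f)


if __name__ == "__main__":
    stages = [
        ("nn", lambda: sum(i * i for i in range(100_000))),
        ("decoder", lambda: sorted(range(50_000), reverse=True)),
    ]
    write_trace(record_events(stages), "toy_trace.json")
```

Loading toy_trace.json with the same steps shows one bar per stage on a shared timeline, analogous to the nn and decoder timings reported in the log above.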

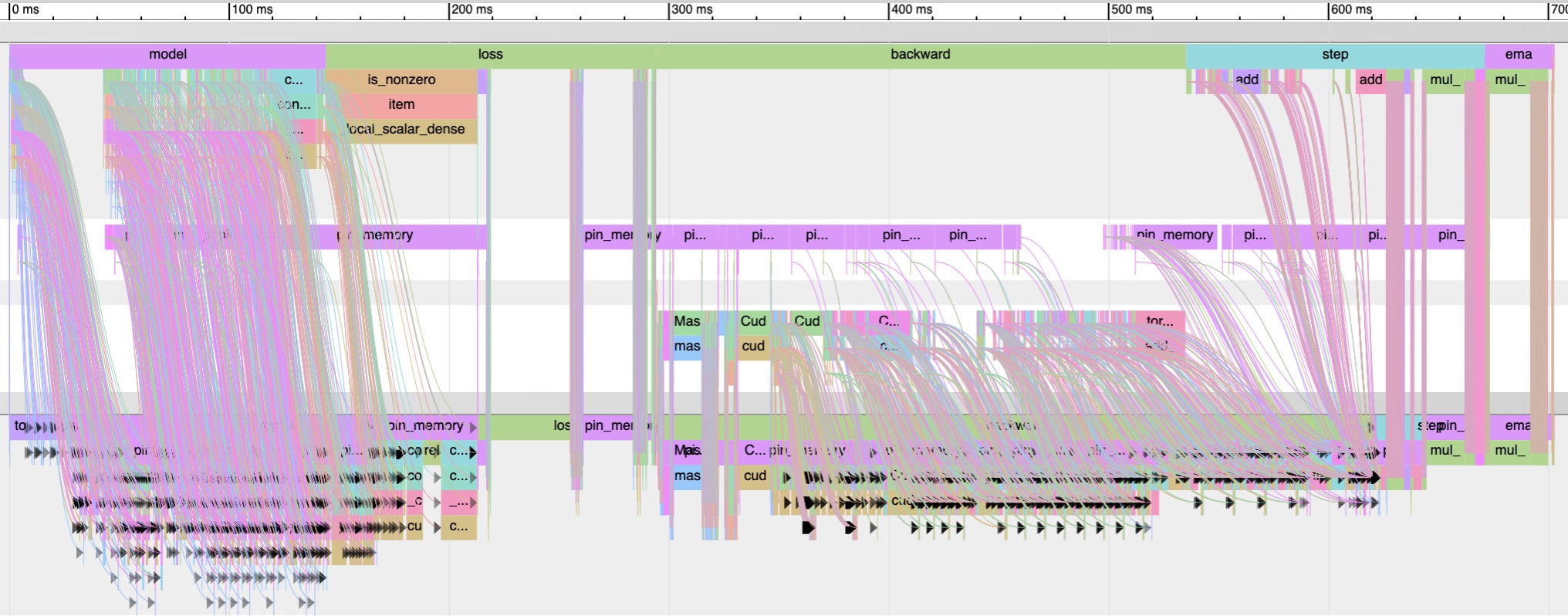

This is the same type of plot used to trace the training of a batch; an example is shown below.

Profile Training

For a training batch, the Chrome trace looks like this: