CrowdPose


More info on the CrowdPose dataset: arxiv.org/abs/1812.00324, github.com/Jeff-sjtu/CrowdPose.

This page gives a quick introduction to OpenPifPaf’s CrowdPose plugin that is part of openpifpaf.plugins.

The plugin adds a DataModule. CrowdPose annotations are COCO-compatible, so this datamodule only has to configure the existing COCO dataset class.
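Because the annotations are COCO-compatible, each person carries a flat `keypoints` list of `[x, y, v]` triplets. In COCO, `v` is 0 for unlabeled, 1 for labeled but not visible, and 2 for labeled and visible. For CrowdPose there are 14 triplets, in the keypoint order listed in the head metas below. The following is a minimal sketch that decodes such a list into named triplets. The annotation values are invented, and `decode_keypoints` is a hypothetical helper, not part of openpifpaf.

```python
# The 14 CrowdPose keypoint names, in the order used by the plugin's head metas.
KEYPOINTS = [
    'left_shoulder', 'right_shoulder', 'left_elbow', 'right_elbow',
    'left_wrist', 'right_wrist', 'left_hip', 'right_hip',
    'left_knee', 'right_knee', 'left_ankle', 'right_ankle',
    'head', 'neck',
]


def decode_keypoints(flat):
    """Hypothetical helper: group a flat COCO [x1, y1, v1, x2, y2, v2, ...] list by name."""
    if len(flat) != 3 * len(KEYPOINTS):
        raise ValueError(f'expected {3 * len(KEYPOINTS)} values, got {len(flat)}')
    return {name: tuple(flat[3 * i:3 * i + 3]) for i, name in enumerate(KEYPOINTS)}


# Invented annotation: only the two shoulders and the head are labeled.
flat = [0] * (3 * len(KEYPOINTS))
flat[0:3] = [100, 50, 2]    # left_shoulder (index 0), labeled and visible
flat[3:6] = [120, 50, 2]    # right_shoulder (index 1)
flat[36:39] = [110, 20, 2]  # head (index 12 -> values 36..38)
annotation = {'image_id': 1, 'category_id': 1, 'num_keypoints': 3, 'keypoints': flat}

decoded = decode_keypoints(annotation['keypoints'])
labeled = [name for name, (_, _, v) in decoded.items() if v > 0]
print(labeled)
```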

This plugin is quite small and might serve as a template for your custom plugin for other COCO-compatible datasets.

Let’s start with the setup for this notebook and register all available OpenPifPaf plugins:

```python
import openpifpaf

# discover and register all installed OpenPifPaf plugins
openpifpaf.plugin.register()
print(openpifpaf.plugin.REGISTERED.keys())
```

```
dict_keys(['openpifpaf.plugins.animalpose', 'openpifpaf.plugins.apollocar3d', 'openpifpaf.plugins.cifar10', 'openpifpaf.plugins.coco', 'openpifpaf.plugins.crowdpose', 'openpifpaf.plugins.nuscenes', 'openpifpaf.plugins.posetrack', 'openpifpaf.plugins.wholebody', 'openpifpaf_extras'])
```
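Besides the built-in `openpifpaf.plugins.*` modules, the list above contains `openpifpaf_extras`, which is a separately installed top-level package. That key suggests third-party plugins are found as top-level modules whose names start with `openpifpaf_`. Under that assumption, the discovery step can be sketched with the standard library's `pkgutil`. This only illustrates the naming convention. It is not a reimplementation of `openpifpaf.plugin.register()`, which also imports the modules and registers them. `find_plugin_modules` is a hypothetical helper.

```python
import pkgutil


def find_plugin_modules(prefix='openpifpaf_'):
    """Hypothetical helper: list importable top-level modules whose names start with `prefix`.

    Assumes plugins follow the `openpifpaf_*` naming convention; nothing is imported.
    """
    return sorted(
        module.name
        for module in pkgutil.iter_modules()
        if module.name.startswith(prefix)
    )


print(find_plugin_modules())
```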

Inspect

Next, we configure and instantiate the datamodule and look at the configured head metas:

```python
datamodule = openpifpaf.plugins.crowdpose.CrowdPose()
print(datamodule.head_metas)
```

```
[Cif(name='cif', dataset='crowdpose', head_index=None, base_stride=None, upsample_stride=1, keypoints=['left_shoulder', 'right_shoulder', 'left_elbow', 'right_elbow', 'left_wrist', 'right_wrist', 'left_hip', 'right_hip', 'left_knee', 'right_knee', 'left_ankle', 'right_ankle', 'head', 'neck'], sigmas=[0.079, 0.079, 0.072, 0.072, 0.062, 0.062, 0.107, 0.107, 0.087, 0.087, 0.089, 0.089, 0.079, 0.079], pose=array([[-1.4 , 8. , 2. ],
       [ 1.4 , 8. , 2. ],
       [-1.75, 6. , 2. ],
       [ 1.75, 6.2 , 2. ],
       [-1.75, 4. , 2. ],
       [ 1.75, 4.2 , 2. ],
       [-1.26, 4. , 2. ],
       [ 1.26, 4. , 2. ],
       [-1.4 , 2. , 2. ],
       [ 1.4 , 2.1 , 2. ],
       [-1.4 , 0. , 2. ],
       [ 1.4 , 0.1 , 2. ],
       [ 0. , 10.3 , 2. ],
       [ 0. , 9.3 , 2. ]]), draw_skeleton=[[13, 14], [14, 1], [14, 2], [1, 2], [7, 8], [1, 3], [3, 5], [2, 4], [4, 6], [1, 7], [2, 8], [7, 9], [9, 11], [8, 10], [10, 12]], score_weights=None, decoder_seed_mask=None, training_weights=None), Caf(name='caf', dataset='crowdpose', head_index=None, base_stride=None, upsample_stride=1, keypoints=['left_shoulder', 'right_shoulder', 'left_elbow', 'right_elbow', 'left_wrist', 'right_wrist', 'left_hip', 'right_hip', 'left_knee', 'right_knee', 'left_ankle', 'right_ankle', 'head', 'neck'], sigmas=[0.079, 0.079, 0.072, 0.072, 0.062, 0.062, 0.107, 0.107, 0.087, 0.087, 0.089, 0.089, 0.079, 0.079], skeleton=[[13, 14], [14, 1], [14, 2], [1, 2], [7, 8], [1, 3], [3, 5], [2, 4], [4, 6], [1, 7], [2, 8], [7, 9], [9, 11], [8, 10], [10, 12]], pose=array([[-1.4 , 8. , 2. ],
       [ 1.4 , 8. , 2. ],
       [-1.75, 6. , 2. ],
       [ 1.75, 6.2 , 2. ],
       [-1.75, 4. , 2. ],
       [ 1.75, 4.2 , 2. ],
       [-1.26, 4. , 2. ],
       [ 1.26, 4. , 2. ],
       [-1.4 , 2. , 2. ],
       [ 1.4 , 2.1 , 2. ],
       [-1.4 , 0. , 2. ],
       [ 1.4 , 0.1 , 2. ],
       [ 0. , 10.3 , 2. ],
       [ 0. , 9.3 , 2. ]]), sparse_skeleton=None, dense_to_sparse_radius=2.0, only_in_field_of_view=False, decoder_confidence_scales=None, training_weights=None)]
```

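The output shows that the CrowdPose datamodule configures two heads: a CIF head (Composite Intensity Field) and a CAF head (Composite Association Field). Both are defined on the same 14 keypoints.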
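The skeleton pairs in the head metas index into the keypoint list. The indices run from 1 to 14 for 14 keypoints, so they are 1-based. The following minimal sketch translates them into readable name pairs. The values are copied from the output above, and `named_connections` is a hypothetical helper, not an openpifpaf function.

```python
# Copied from the head metas printed above.
KEYPOINTS = [
    'left_shoulder', 'right_shoulder', 'left_elbow', 'right_elbow',
    'left_wrist', 'right_wrist', 'left_hip', 'right_hip',
    'left_knee', 'right_knee', 'left_ankle', 'right_ankle',
    'head', 'neck',
]
SKELETON = [[13, 14], [14, 1], [14, 2], [1, 2], [7, 8], [1, 3], [3, 5], [2, 4],
            [4, 6], [1, 7], [2, 8], [7, 9], [9, 11], [8, 10], [10, 12]]


def named_connections(skeleton, keypoints):
    """Hypothetical helper: map 1-based skeleton index pairs to keypoint name pairs."""
    return [(keypoints[a - 1], keypoints[b - 1]) for a, b in skeleton]


conns = named_connections(SKELETON, KEYPOINTS)
for a, b in conns:
    print(f'{a} -- {b}')
```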

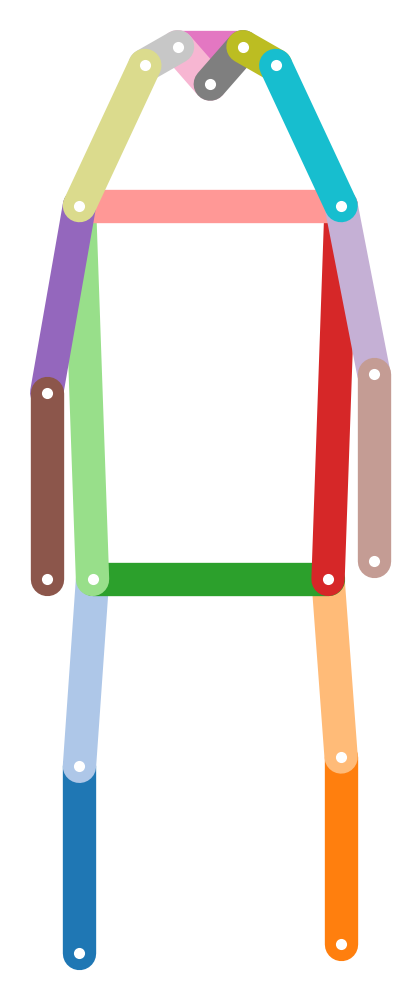

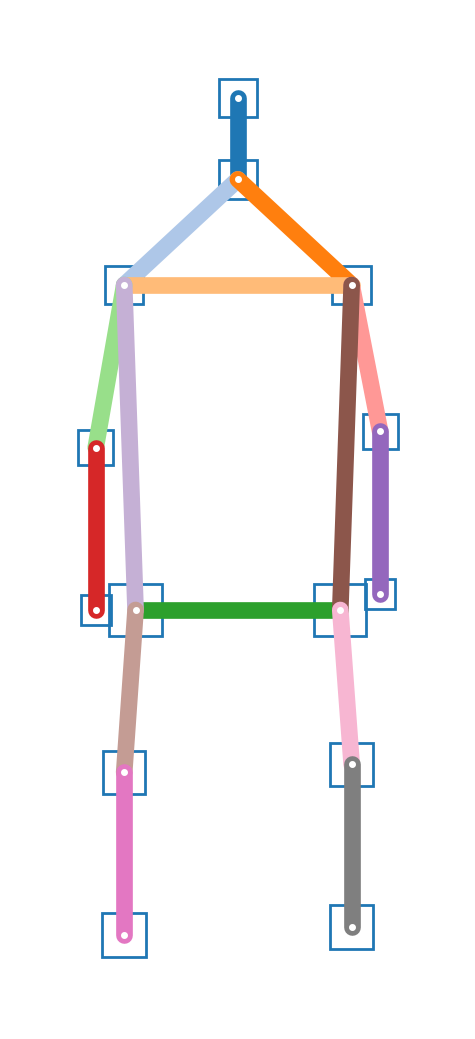

Next, we want to visualize the pose:

```python
import openpifpaf

# first make an annotation from the CIF head meta
ann = openpifpaf.Annotation.from_cif_meta(datamodule.head_metas[0])

# visualize the annotation, including the per-joint scales
openpifpaf.show.KeypointPainter.show_joint_scales = True
keypoint_painter = openpifpaf.show.KeypointPainter()
with openpifpaf.show.Canvas.annotation(ann) as ax:
    keypoint_painter.annotation(ax, ann)
```
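The `pose` array in the head metas has one row per keypoint, in the same order as the keypoint names. The sketch below assumes the columns are x, y, and a COCO-style visibility flag (2, i.e. visible, for every row here). Under that assumption, the skeleton connections turn into line segments, which is roughly the geometry a keypoint painter draws. This is a plain-Python sketch with values copied from the output above, and `skeleton_segments` is a hypothetical helper. Note that in these values the head has the largest y.

```python
# Canonical pose and skeleton copied from the head metas printed above.
POSE = [
    [-1.4, 8.0, 2.0], [1.4, 8.0, 2.0], [-1.75, 6.0, 2.0], [1.75, 6.2, 2.0],
    [-1.75, 4.0, 2.0], [1.75, 4.2, 2.0], [-1.26, 4.0, 2.0], [1.26, 4.0, 2.0],
    [-1.4, 2.0, 2.0], [1.4, 2.1, 2.0], [-1.4, 0.0, 2.0], [1.4, 0.1, 2.0],
    [0.0, 10.3, 2.0], [0.0, 9.3, 2.0],
]
SKELETON = [[13, 14], [14, 1], [14, 2], [1, 2], [7, 8], [1, 3], [3, 5], [2, 4],
            [4, 6], [1, 7], [2, 8], [7, 9], [9, 11], [8, 10], [10, 12]]


def skeleton_segments(pose, skeleton):
    """Hypothetical helper: return ((x1, y1), (x2, y2)) for each connection.

    A connection is kept only if both endpoints have visibility > 0
    (assumed COCO-style flag in the third column). Skeleton indices are 1-based.
    """
    segments = []
    for a, b in skeleton:
        (x1, y1, v1), (x2, y2, v2) = pose[a - 1], pose[b - 1]
        if v1 > 0 and v2 > 0:
            segments.append(((x1, y1), (x2, y2)))
    return segments


segs = skeleton_segments(POSE, SKELETON)
print(len(segs), segs[0])
```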

Prediction

We use the pretrained model resnet50-crowdpose:

```
%%bash
python -m openpifpaf.predict coco/000000081988.jpg \
  --checkpoint=resnet50-crowdpose \
  --image-output coco/000000081988.jpg.predictions-crowdpose.jpeg \
  --image-min-dpi=200
```

```
INFO:__main__:neural network device: cpu (CUDA available: False, count: 0)
INFO:openpifpaf.decoder.factory:No specific decoder requested. Using the first one from:
--decoder=cifcaf:0
--decoder=posesimilarity:0
Use any of the above arguments to select one or multiple decoders and to suppress this message.
INFO:openpifpaf.predictor:neural network device: cpu (CUDA available: False, count: 0)
INFO:openpifpaf.decoder.cifcaf:annotations 4: [13, 12, 8, 7]
INFO:openpifpaf.predictor:batch 0: coco/000000081988.jpg
src/openpifpaf/csrc/src/cif_hr.cpp:102: UserInfo: resizing cifhr buffer
src/openpifpaf/csrc/src/occupancy.cpp:53: UserInfo: resizing occupancy buffer
```
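The command above writes an annotated image. As far as we know, openpifpaf.predict can also write machine-readable predictions with `--json-output`. The sketch below assumes each prediction in that file is a dict with a flat `keypoints` list of `[x, y, confidence]` triplets in the CrowdPose keypoint order, plus `bbox`, `score`, and `category_id`. Under that assumption, it filters joints by confidence. The example data is invented, and `confident_keypoints` is a hypothetical helper.

```python
import json

KEYPOINTS = [
    'left_shoulder', 'right_shoulder', 'left_elbow', 'right_elbow',
    'left_wrist', 'right_wrist', 'left_hip', 'right_hip',
    'left_knee', 'right_knee', 'left_ankle', 'right_ankle',
    'head', 'neck',
]


def confident_keypoints(prediction, keypoints, threshold=0.5):
    """Hypothetical helper: {name: (x, y)} for joints with confidence > threshold.

    Assumes prediction['keypoints'] is a flat [x, y, c, x, y, c, ...] list.
    """
    flat = prediction['keypoints']
    result = {}
    for i, name in enumerate(keypoints):
        x, y, c = flat[3 * i:3 * i + 3]
        if c > threshold:
            result[name] = (x, y)
    return result


# Invented data in the assumed format; a real file would be read with json.load().
raw = json.dumps([{
    'keypoints': [10.0, 20.0, 0.9, 30.0, 20.0, 0.4] + [0.0] * 36,
    'bbox': [5.0, 5.0, 40.0, 80.0],
    'score': 0.7,
    'category_id': 1,
}])
for pred in json.loads(raw):
    print(pred['score'], confident_keypoints(pred, KEYPOINTS))
```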

```python
import IPython.display

IPython.display.Image('coco/000000081988.jpg.predictions-crowdpose.jpeg')
```

Image credit: “Learning to surf” by fotologic, which is licensed under CC-BY-2.0.

Dataset

For training and evaluation, you need to download the dataset.

```bash
mkdir data-crowdpose
cd data-crowdpose
# download links here: https://github.com/Jeff-sjtu/CrowdPose
unzip annotations.zip
unzip images.zip
```

Now you can use the standard openpifpaf.train and openpifpaf.eval commands as documented in Training with --dataset=crowdpose.
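CrowdPose evaluation follows the COCO keypoint protocol. That protocol compares a predicted pose with a ground-truth pose using the object keypoint similarity (OKS), which depends on the per-keypoint `sigmas` shown in the head metas. For each labeled ground-truth keypoint with distance d to the prediction, ground-truth area A (as squared scale), and k = 2σ, the contribution is exp(-d² / (2·A·k²)). OKS is the mean over labeled keypoints. The following minimal pure-Python sketch was written independently of openpifpaf's and the CrowdPose toolkit's code. It simplifies details such as the case where a ground truth has no labeled keypoints, which this sketch just scores as 0.

```python
import math

# Per-keypoint sigmas from the CrowdPose head metas above.
SIGMAS = [0.079, 0.079, 0.072, 0.072, 0.062, 0.062, 0.107, 0.107,
          0.087, 0.087, 0.089, 0.089, 0.079, 0.079]


def oks(pred, gt, area, sigmas=SIGMAS):
    """COCO-style object keypoint similarity for flat [x, y, v, ...] lists.

    Only ground-truth keypoints with v > 0 contribute; `area` is the
    ground-truth area used as the squared object scale.
    """
    total, count = 0.0, 0
    for i, sigma in enumerate(sigmas):
        gx, gy, gv = gt[3 * i:3 * i + 3]
        if gv <= 0:
            continue  # unlabeled ground-truth keypoints are ignored
        px, py = pred[3 * i], pred[3 * i + 1]
        d2 = (px - gx) ** 2 + (py - gy) ** 2
        k2 = (2 * sigma) ** 2
        total += math.exp(-d2 / (2 * area * k2))
        count += 1
    return total / count if count else 0.0  # simplification: no labels -> 0


# Invented example: only left_shoulder is labeled, and the prediction is 3 px off.
gt = [0.0] * (3 * len(SIGMAS))
gt[0:3] = [50.0, 50.0, 2]
pred = list(gt)
pred[0] += 3.0
print(round(oks(pred, gt, area=1000.0), 3))
```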